Public Channels

- # 3m

- # accounting

- # adm-class-action

- # admin-tip-2022-pay-plan

- # admin_blx_dailyscrum

- # admin_shield_helpdesk

- # admin_sl_tip_reconcile

- # admin_tip_contractreporting

- # admin_tip_declines-cb

- # admin_tip_declines-lv

- # admin_tip_qa_error_log_test

- # admin_tip_qa_log_test

- # admin_tip_setup_vegas

- # adult-survivors-act

- # adventist-school-abuse

- # alameda-juv-detention-abuse

- # amazon-discrimination

- # amex-black-owned-bus-discrimination

- # arizona-juvenile-hall-abuse

- # arizona-youth-treatment-center

- # asbestos-cancer

- # assault-datingapp

- # ata-mideast

- # aunt-marthas-integrated-care-abuse

- # auto-accidents

- # auto-insurance-denial

- # auto-nation-class

- # awko-inbound-return-calls

- # baby-food-autism

- # backpage-cityxguide-sex-trafficking

- # bair-hugger

- # baltimore-clergy-abuse

- # bard-powerport-catheter

- # bayview-sexual-abuse

- # belviq

- # big_brothers_sister_abuse

- # birth-injuries

- # birth-injury

- # bizdev

- # bp_camp_lejune_log

- # button-batteries

- # ca-camp-abuse

- # ca-group-home-abuse

- # ca-institute-for-women

- # ca-juvenile-hall-abuse

- # ca-life-insurance-lapse

- # california-jdc-abuse

- # call-center-tv-planning

- # camp-lejeune

- # capital-prep-school-abuse

- # carlsbad_office

- # cartiva

- # cerebral-palsy

- # chowchilla-womens-prison-abuse

- # christian_youth_assault

- # city-national-bank-discrimination

- # clergy-abuse

- # contract-litigation

- # craig-harward-joseph-weller-abuse

- # creativeproduction

- # cumberland-hospital-abuse

- # daily-decline-report

- # daily-replacement-request-report

- # dailynumbers

- # debtrelief

- # defective-idle-stop-lawsuit

- # depo-provera

- # dr-barry-brock-abuse

- # dr-bradford-bomba-abuse

- # dr-derrick-todd-abuse

- # dr-hadden-abuse

- # dr-oumair-aejaz-abuse

- # dr-scott-lee-abuse

- # dublin-correctional-institute-abuse

- # dubsmash-video-privacy

- # e-sign_alerts

- # elmiron

- # equitable-financial-life

- # erbs-palsy

- # eric-norman-olsen-ca-school-abuse

- # ertc

- # estate-planning

- # ethylene-oxide

- # ezricare-eye-drops

- # farmer-loan-discrimination

- # fb-form-field-names

- # female-ny-correctional-facility-abuse

- # firefoam

- # first-responder-accidents

- # flo-app-class-rep

- # food_poisoning

- # ford-lincoln-sudden-engine-failure

- # form-95-daily-report

- # gaming-addiction

- # general

- # general-liability

- # general-storm-damage

- # glen-mills-school-abuse

- # globaldashboard

- # gm_oil_defect

- # gtmcontainers

- # hanna-boys-center-abuse

- # harris-county-jail-deaths

- # healthcare-data-breach

- # herniamesh

- # hoag-hospital-abuse

- # hospital-portal-privacy

- # hudsons-bay-high-school-abuse

- # hurricane-damage

- # illinois-clergy-abuse

- # illinois-foster-care-abuse

- # illinois-juv-hall-abuse

- # illinois-youth-treatment-abuse

- # instant-soup-cup-child-burns

- # intake-daily-disposition-report

- # internal-fb-accounts

- # intuit-privacy-violations

- # ivc

- # jehovahs-witness-church-abuse

- # jrotc-abuse

- # kanakuk-kamps-abuse

- # kansas-juv-detention-center-abuse

- # kansas-juvenile-detention-center-yrtc-abuse

- # kentucky-organ-donor-investigation

- # knee_implant_cement

- # la-county-foster-care-abuse

- # la-county-juv-settlement

- # la-wildfires

- # la_wildfires_fba_organic

- # lausd-abuse

- # law-runle-intake-questions

- # leadspedia

- # liquid-destroyed-embryo

- # los-padrinos-juv-hall-abuse

- # maclaren-hall-abuse

- # maryland-female-cx-abuse

- # maryland-juv-hall-abuse

- # maryland-school-abuse

- # maui-lahaina-wildfires

- # mcdonogh-school-abuse

- # medical-mal-cancer

- # medical-malpractice

- # mercedes-class-action

- # metal-hip

- # michigan-catholic-clergy-abuse

- # michigan-foster-care-abuse

- # michigan-juv-hall-abuse

- # michigan-pollution

- # michigan-youth-treatment-abuse

- # milestone-tax-solutons

- # moreno-valley-pastor-abuse

- # mormon-church-abuse

- # mortgage-race-lawsuit

- # muslim-bank-discrimination

- # mva

- # nec

- # nevada-juvenile-hall-abuse

- # new-jersey-juvenile-detention-abuse

- # nh-juvenile-abuse

- # non-english-speaking-callers

- # northwell-sleep-center

- # nursing-home-abuse

- # nursing-home-abuse-litigation

- # ny-presbyterian-abuse

- # ny-urologist-abuse

- # nyc-private-school-abuse

- # onstar-drive-tracking

- # operations-project-staffing-needs

- # organic-tampon-lawsuit

- # otc-decongenstant-cases

- # oxbryta

- # ozempic

- # paragard

- # paraquat

- # patreon-video-privacy-lawsuit

- # patton-hospital-abuse

- # peloton-library-class-action

- # pfas-water-contamination

- # pixels

- # polinsky-childrens-center-abuse

- # pomona-high-school-abuse

- # popcorn-machine-status-

- # porterville-developmental-center-ab

- # posting-specs

- # ppp-fraud-whistleblower

- # prescription-medication-overpayment

- # principal-financial-401k-lawsuit

- # private-boarding-school-abuse

- # probate

- # psychiatric-sexual-abuse

- # redlands-school-sex-abuse

- # rent-increase-lawsuit

- # riceberg-sex-abuse

- # riverside-juvenile-detention-abuse

- # rosemead-highschool-abuse

- # roundup

- # royal-rangers-abuse

- # sacramento-juvenile-detention-abuse

- # salon-professional-bladder-cancer

- # san-bernardiino-juv-detention-abuse

- # san-diego-juvenile-detention-yrtc-abuse

- # santa-clara-juv-detention-abuse

- # services_blx_dl_log

- # services_blx_seo

- # services_blx_sitedev_pixels

- # shared_shield_legal

- # shield_orders_copper

- # shieldclientservices

- # shimano-crank-set-recall

- # sjs

- # social-media-teen-harm

- # socialengagement

- # southern-baptist-church-abuse

- # southwest-airlines-discrimination

- # st-helens-sexual-abuse

- # state-farm-denial

- # student-athlete-photo-hacking

- # suboxone-dental-injuries

- # tasigna

- # tepezza-hearing-loss

- # tesla-discrimination

- # texas-youth-facilities-abuse

- # thacher-school-abuse

- # the-hill-video-privacy

- # tip-tracking-number-config

- # total-loss-insurance

- # tough-mudder-illness

- # transvaginal-mesh

- # trinity-private-school-abuse

- # trucking-accidents

- # truvada

- # twominutetopicsdevs

- # tylenol-autism

- # type-2-child-diabetes

- # uber_lyft_assault

- # uhc-optum-change-databreach

- # university-of-chicago-discrimination

- # usa-consumer-network

- # valsartan

- # vertical_fields

- # video-gaming-sextortion

- # west-virginia-hernia-repair

- # wood-pellet-lawsuits

- # wordpress

- # workers-compensation

- # wtc_cancer

- # youth-treatment-center-abuse

Private Channels

- 🔒 2fa

- 🔒 account_services

- 🔒 acts-integrations

- 🔒 acv-gummy

- 🔒 admin-tip-invoicing

- 🔒 admin_activity

- 🔒 admin_blx_account_key

- 🔒 admin_blx_account_mgmt

- 🔒 admin_blx_analytics

- 🔒 admin_blx_bizdev

- 🔒 admin_blx_bizdev_audits

- 🔒 admin_blx_bizdev_sales

- 🔒 admin_blx_callcenter

- 🔒 admin_blx_callcetner_dispos

- 🔒 admin_blx_dailyfinancials

- 🔒 admin_blx_finance

- 🔒 admin_blx_invoices

- 🔒 admin_blx_lead_dispo

- 🔒 admin_blx_library

- 🔒 admin_blx_moments_ai

- 🔒 admin_blx_office

- 🔒 admin_shield_analytics

- 🔒 admin_shield_callcenter

- 🔒 admin_shield_callcenter_bills

- 🔒 admin_shield_commissions

- 🔒 admin_shield_contract-pacing

- 🔒 admin_shield_finance

- 🔒 admin_shield_finance_temp

- 🔒 admin_shield_google

- 🔒 admin_shield_google_leads

- 🔒 admin_shield_integrations

- 🔒 admin_shield_isa-sc_log

- 🔒 admin_shield_isl-mb_log

- 🔒 admin_shield_isl-rev_log

- 🔒 admin_shield_isl-svc_log

- 🔒 admin_shield_library

- 🔒 admin_shield_pmo

- 🔒 admin_shield_sales_ops

- 🔒 admin_shield_unsold-leads

- 🔒 admin_tip_analytics

- 🔒 admin_tip_casecriteria

- 🔒 admin_tip_corpdev

- 🔒 admin_tip_dialer

- 🔒 admin_tip_finance

- 🔒 admin_tip_formation

- 🔒 admin_tip_hiring

- 🔒 admin_tip_integrations

- 🔒 admin_tip_lawruler

- 🔒 admin_tip_library

- 🔒 admin_tip_logistics

- 🔒 admin_tip_services

- 🔒 advisor-connect-life

- 🔒 ai-development-team

- 🔒 airbnb-assault

- 🔒 arcads-support

- 🔒 badura-management-team

- 🔒 bailey-glasser-integrations

- 🔒 bcl-integration-coordination

- 🔒 blx-social-media-teen-harm

- 🔒 boysgirlsclubs-abuse

- 🔒 bp_camp_lejune_metrics

- 🔒 brandlytics-maxfusion

- 🔒 brandmentaility

- 🔒 brittanys-birthday-surprise

- 🔒 business-intelligence

- 🔒 business_intelligence_dev_team

- 🔒 call_leads_dispos

- 🔒 camp-lejeune-gwpb

- 🔒 camp_lejeune_organic

- 🔒 campaign_alerts

- 🔒 clo

- 🔒 commits

- 🔒 conference-planning

- 🔒 content-dev-requests

- 🔒 contract-float-postings

- 🔒 cpap-recall

- 🔒 cust_blx_alg

- 🔒 cust_blx_bcl

- 🔒 cust_blx_bcl_ext

- 🔒 cust_blx_bcl_intake_cc_ext

- 🔒 cust_blx_bcl_sachs_ext

- 🔒 cust_blx_bdl

- 🔒 cust_blx_br

- 🔒 cust_blx_brandlytics

- 🔒 cust_blx_ccghealth

- 🔒 cust_blx_dl

- 🔒 cust_blx_fls

- 🔒 cust_blx_glo

- 🔒 cust_blx_jgw

- 🔒 cust_blx_losg

- 🔒 cust_blx_rwz

- 🔒 cust_blx_sok

- 🔒 cust_blx_som

- 🔒 cust_blx_som_pr_ext

- 🔒 cust_blx_spl

- 🔒 d-is-for-data

- 🔒 dacthal

- 🔒 darrow-disposition_report

- 🔒 data-dictionary

- 🔒 datastan

- 🔒 deploys

- 🔒 dev_shield_lcp

- 🔒 developers

- 🔒 devereaux-abuse

- 🔒 devops

- 🔒 dicello-flatirons_coordination

- 🔒 dl-client-escalations

- 🔒 dwight-maximus

- 🔒 exactech-recall

- 🔒 external-shieldlegal-mediamath

- 🔒 exxon-discrimination

- 🔒 final-video-ads

- 🔒 firefoam_organic

- 🔒 five9-project-team

- 🔒 flatirons-coordination

- 🔒 focus_groups

- 🔒 form-integrations

- 🔒 gilman_schools_abuse

- 🔒 gilman_schools_abuse_organic

- 🔒 google-accounts-transition-to-shieldlegal_dot_com

- 🔒 google-ads-conversions

- 🔒 google_media_buying_general

- 🔒 group-messages

- 🔒 hair-relaxer-cancer

- 🔒 hair_relaxer_organic

- 🔒 help-desk

- 🔒 help-desk-google-alerts

- 🔒 help-desk-integrations

- 🔒 help-desk-old

- 🔒 help-desk-security

- 🔒 hiring

- 🔒 hiring_bizdev

- 🔒 howard_county_abuse

- 🔒 howard_county_abuse_organic

- 🔒 human-trafficking

- 🔒 il_clergy_abuse_organic

- 🔒 il_juvenile_hall_organic

- 🔒 il_school_abuse_organic

- 🔒 illinois-juv-hall-abuse-bg-tv

- 🔒 illinois-juv-hall-abuse-dl-tv

- 🔒 illinois-juv-hall-abuse-sss-tv

- 🔒 innovation

- 🔒 intakes-and-fieldmaps

- 🔒 integrations

- 🔒 integrations-qa

- 🔒 integrations-team

- 🔒 integrations_team

- 🔒 internaltraffic

- 🔒 invoicing

- 🔒 la-county-settlements

- 🔒 la-juv-hall-abuse-tv

- 🔒 lawruler_dev

- 🔒 m-a-g

- 🔒 md-juv-hall-abuse-bg-tv

- 🔒 md-juv-hall-abuse-dl-tv

- 🔒 md-juv-hall-abuse-sss-tv

- 🔒 md-juvenile-hall-abuse-organic

- 🔒 md_clergy_organic

- 🔒 md_key_schools_organic

- 🔒 md_school_abuse_organic

- 🔒 meadow-integrations

- 🔒 media_buyers

- 🔒 michigan_clergy_abuse_organic

- 🔒 ml_tip_university

- 🔒 monday_reporting

- 🔒 mtpr-creative

- 🔒 mva_tx_lsa_bcl

- 🔒 national-sex-abuse-organic

- 🔒 national_sex_abuse_sil_organic

- 🔒 new_orders_shield

- 🔒 newcampaignsetup

- 🔒 nicks-notes

- 🔒 nitido-meta-integrations

- 🔒 nitido_fbpmd_capi

- 🔒 northwestern-sports-abuse

- 🔒 northwestern-university-abuse-organic

- 🔒 nv_auto_accidents

- 🔒 ny-presbyterian-organic

- 🔒 ogintake

- 🔒 ops-administrative

- 🔒 ops_shield_lcp

- 🔒 overthecap

- 🔒 paqfixes

- 🔒 pennsylvania-juvenile-hall-abuse

- 🔒 pixels_server

- 🔒 pointbreak_analytics

- 🔒 pointbreak_enrollments

- 🔒 pointbreak_operations

- 🔒 predictive-model-platform

- 🔒 privacy-requests

- 🔒 proj-leadflow

- 🔒 reporting_automations

- 🔒 return-requests

- 🔒 rev-update-anthony

- 🔒 rev-update-caleb

- 🔒 rev-update-hamik

- 🔒 rev-update-jack

- 🔒 rev-update-john

- 🔒 rev-update-kris

- 🔒 rev-update-main

- 🔒 rev-update-maximus

- 🔒 runcloud

- 🔒 sales-commissions

- 🔒 security

- 🔒 services_blx_creatives

- 🔒 services_blx_leadspedia

- 🔒 services_blx_mediabuying

- 🔒 services_blx_mediabuying_disputes

- 🔒 services_blx_sitedev

- 🔒 services_blx_socialmedia

- 🔒 shield-dash-pvs

- 🔒 shield-legal_fivetran

- 🔒 shield_daily_revenue

- 🔒 shield_pvs_reports

- 🔒 shield_tv_callcenter_ext

- 🔒 shieldclientdashboards

- 🔒 sl-data-team

- 🔒 sl_sentinel_openjar_admin_docs

- 🔒 sl_sentinel_openjar_admin_general

- 🔒 sl_sentinel_openjar_admin_orders

- 🔒 sl_sentinel_openjar_birthinjury

- 🔒 sl_sentinel_openjar_cartiva

- 🔒 sl_sentinel_openjar_clergyabuse

- 🔒 sl_sentinel_openjar_nec

- 🔒 social-media-organic-and-paid

- 🔒 social-media-postback

- 🔒 som_miami_reg

- 🔒 statistics-and-reporting

- 🔒 stats-and-reporting-announcements

- 🔒 stock-fraud

- 🔒 strategy-and-operations

- 🔒 sunscreen

- 🔒 super-pod

- 🔒 surrogacy

- 🔒 talc

- 🔒 testing-media-spend-notification

- 🔒 the-apartment

- 🔒 the-blog-squad-v2

- 🔒 the-condo

- 🔒 tik-tok-video-production

- 🔒 tiktok_media_ext

- 🔒 tip-connect

- 🔒 tip-connect-notes

- 🔒 tip-director-commission

- 🔒 tip-organic-linkedin

- 🔒 tip_new_campaign_requests-6638

- 🔒 tip_pricing_commissions

- 🔒 tip_reporting

- 🔒 tip_services_customer_shieldlegal

- 🔒 training-recordings

- 🔒 truerecovery-dev

- 🔒 tv_media

- 🔒 university-abuse-organic

- 🔒 video-production

- 🔒 video_content_team

- 🔒 vmi-abuse

- 🔒 wordpress-mgmt

- 🔒 xeljanz

- 🔒 zantac

Direct Messages

- 👤 Mike Everhart , Mike Everhart

- 👤 Mike Everhart , slackbot

- 👤 slackbot , slackbot

- 👤 Google Drive , slackbot

- 👤 Jack Altunyan , Jack Altunyan

- 👤 Mike Everhart , Jack Altunyan

- 👤 Jack Altunyan , slackbot

- 👤 Mike Everhart , Google Calendar

- 👤 brandon , brandon

- 👤 Marc Barmazel , Marc Barmazel

- 👤 Marc Barmazel , slackbot

- 👤 Ben Brown , Ben Brown

- 👤 Cameron Rentch , slackbot

- 👤 sean , sean

- 👤 Cameron Rentch , Cameron Rentch

- 👤 sean , Cameron Rentch

- 👤 Erik Gratz , slackbot

- 👤 Google Drive , Cameron Rentch

- 👤 Cameron Rentch , Ryan Vaspra

- 👤 Jack Altunyan , Cameron Rentch

- 👤 Ryan Vaspra , slackbot

- 👤 Ryan Vaspra , Ryan Vaspra

- 👤 Mike Everhart , Cameron Rentch

- 👤 sean , Ryan Vaspra

- 👤 Maximus , slackbot

- 👤 Cameron Rentch , Maximus

- 👤 Mike Everhart , Ryan Vaspra

- 👤 Maximus , Maximus

- 👤 Ryan Vaspra , Maximus

- 👤 Jack Altunyan , Maximus

- 👤 Mike Everhart , Maximus

- 👤 Cameron Rentch , john

- 👤 Ryan Vaspra , john

- 👤 john , slackbot

- 👤 Mike Everhart , john

- 👤 Maximus , john

- 👤 Mark Hines , slackbot

- 👤 Mark Hines , Mark Hines

- 👤 Mike Everhart , Mark Hines

- 👤 Ryan Vaspra , Mark Hines

- 👤 Maximus , Mark Hines

- 👤 Cameron Rentch , Mark Hines

- 👤 Maximus , slackbot

- 👤 Google Calendar , Ryan Vaspra

- 👤 deactivateduser717106 , deactivateduser717106

- 👤 Jack Altunyan , Ryan Vaspra

- 👤 Quint , slackbot

- 👤 Intraday_Sold_Leads_Microservice , slackbot

- 👤 james barlow , slackbot

- 👤 Ryan Vaspra , Intraday_Sold_Leads_Microservice

- 👤 Jack Altunyan , Mark Hines

- 👤 Hamik Akhverdyan , slackbot

- 👤 john , Intraday_Sold_Leads_Microservice

- 👤 Mark Hines , Hamik Akhverdyan

- 👤 Maximus , Intraday_Sold_Leads_Microservice

- 👤 Jack Altunyan , Intraday_Sold_Leads_Microservice

- 👤 Devin Dubin , Devin Dubin

- 👤 Devin Dubin , slackbot

- 👤 Hailie Lane , slackbot

- 👤 SLacktopus , slackbot

- 👤 john , Mark Hines

- 👤 Chris , slackbot

- 👤 Ryan Vaspra , Chris

- 👤 TIP Signed Contracts Alert , slackbot

- 👤 Cameron Rentch , Chris

- 👤 Tony , slackbot

- 👤 Tony , Tony

- 👤 Cameron Rentch , Tony

- 👤 Ryan Vaspra , Tony

- 👤 ryan , slackbot

- 👤 Marc Barmazel , Ryan Vaspra

- 👤 Brian Hirst , slackbot

- 👤 Maximus , Brian Hirst

- 👤 Ryan Vaspra , Brian Hirst

- 👤 Tony , Brian Hirst

- 👤 Mike Everhart , Brian Hirst

- 👤 john , Brian Hirst

- 👤 Cameron Rentch , Brian Hirst

- 👤 Brian Hirst , Brian Hirst

- 👤 Caleb Peters , Caleb Peters

- 👤 john , Caleb Peters

- 👤 Caleb Peters , slackbot

- 👤 Brian Hirst , Caleb Peters

- 👤 Cameron Rentch , Caleb Peters

- 👤 Ryan Vaspra , Caleb Peters

- 👤 Intraday_Sold_Leads_Microservice , Caleb Peters

- 👤 Kelly C. Richardson, EdS , slackbot

- 👤 Cameron Rentch , Kelly C. Richardson, EdS

- 👤 Maximus , Caleb Peters

- 👤 Kelly C. Richardson, EdS , Kelly C. Richardson, EdS

- 👤 Marc Barmazel , Cameron Rentch

- 👤 john , Kelly C. Richardson, EdS

- 👤 Mike Everhart , Fabian De Simone

- 👤 Ryan Vaspra , Kelly C. Richardson, EdS

- 👤 Cameron Rentch , Fabian De Simone

- 👤 Mark Hines , Kelly C. Richardson, EdS

- 👤 Mark Hines , Caleb Peters

- 👤 Mark Hines , Brian Hirst

- 👤 Mike Everhart , Caleb Peters

- 👤 Ian Knight , slackbot

- 👤 Kris Standley , slackbot

- 👤 Cameron Rentch , Kris Standley

- 👤 Kris Standley , Kris Standley

- 👤 Google Calendar , Tony

- 👤 Ryan Vaspra , Loom

- 👤 Sabrina Da Silva , slackbot

- 👤 Jack Altunyan , Brian Hirst

- 👤 Google Drive , Kris Standley

- 👤 Cameron Rentch , Andy Rogers

- 👤 Andy Rogers , slackbot

- 👤 Andy Rogers , Andy Rogers

- 👤 Ryan Vaspra , Andy Rogers

- 👤 Luis Cortes , Luis Cortes

- 👤 Luis Cortes , slackbot

- 👤 Hamik Akhverdyan , slackbot

- 👤 sean , Andy Rogers

- 👤 David Temmesfeld , slackbot

- 👤 Cameron Rentch , Dorshan Millhouse

- 👤 Mike Everhart , Chris

- 👤 Dorshan Millhouse , Dorshan Millhouse

- 👤 Dorshan Millhouse , slackbot

- 👤 Chris , Dorshan Millhouse

- 👤 Google Calendar , David Temmesfeld

- 👤 David Temmesfeld , David Temmesfeld

- 👤 Ryan Vaspra , Dorshan Millhouse

- 👤 David Temmesfeld , Dorshan Millhouse

- 👤 Natalie Nguyen , slackbot

- 👤 Chris , David Temmesfeld

- 👤 Mark Hines , Andy Rogers

- 👤 Rahat Yasir , slackbot

- 👤 Mike Everhart , Tony

- 👤 Caleb Peters , David Temmesfeld

- 👤 brettmichael , slackbot

- 👤 luke , luke

- 👤 greg , slackbot

- 👤 Ryan Vaspra , luke

- 👤 luke , slackbot

- 👤 Brian Hirst , luke

- 👤 Mike Everhart , luke

- 👤 sean , greg

- 👤 Allen Gross , slackbot

- 👤 Jon Pascal , slackbot

- 👤 Ryan Vaspra , brettmichael

- 👤 Google Drive , brettmichael

- 👤 Brittany Barnett , Brittany Barnett

- 👤 LaToya Palmer , LaToya Palmer

- 👤 LaToya Palmer , slackbot

- 👤 luke , LaToya Palmer

- 👤 Brittany Barnett , slackbot

- 👤 Ryan Vaspra , LaToya Palmer

- 👤 Mike Everhart , LaToya Palmer

- 👤 Mark Hines , LaToya Palmer

- 👤 Kelly C. Richardson, EdS , LaToya Palmer

- 👤 Maximus , luke

- 👤 Dealbot by Pipedrive , slackbot

- 👤 Google Drive , LaToya Palmer

- 👤 craig , slackbot

- 👤 Brian Hirst , Andy Rogers

- 👤 Ryan Vaspra , craig

- 👤 Tony , John Gillespie

- 👤 Kyle Chung , Kyle Chung

- 👤 Cameron Rentch , craig

- 👤 John Gillespie , connor

- 👤 Google Drive , Kelly C. Richardson, EdS

- 👤 Google Drive , connor

- 👤 Tony , Andy Rogers

- 👤 Google Calendar , Andy Rogers

- 👤 Brian Hirst , Loom

- 👤 Andy Rogers , luke

- 👤 Andy Rogers , LaToya Palmer

- 👤 carl , carl

- 👤 Google Drive , Brittany Barnett

- 👤 Caleb Peters , luke

- 👤 Google Calendar , Mark Hines

- 👤 Google Calendar , sean

- 👤 Google Calendar , Maximus

- 👤 Brian Hirst , LaToya Palmer

- 👤 Google Calendar , Brian Hirst

- 👤 Ryan Vaspra , deleted-U04KC9L4HRB

- 👤 Cameron Rentch , LaToya Palmer

- 👤 Adrienne Hester , slackbot

- 👤 Ryan Vaspra , Adrienne Hester

- 👤 Adrienne Hester , Adrienne Hester

- 👤 Mike Everhart , Adrienne Hester

- 👤 LaToya Palmer , Adrienne Hester

- 👤 Mike Everhart , Andy Rogers

- 👤 Andy Rogers , Adrienne Hester

- 👤 Kelly C. Richardson, EdS , Adrienne Hester

- 👤 Maximus , Andy Rogers

- 👤 Ryan Vaspra , connor

- 👤 Mark Hines , Adrienne Hester

- 👤 Chris , Adrienne Hester

- 👤 Cameron Rentch , Adrienne Hester

- 👤 Mark Hines , Loom

- 👤 Google Drive , Andy Rogers

- 👤 Cameron Rentch , deleted-U04GZ79CPNG

- 👤 Mike Everhart , deleted-U056TJH4KUL

- 👤 luke , Mark Maniora

- 👤 Mark Hines , Mark Maniora

- 👤 Kelly C. Richardson, EdS , Mark Maniora

- 👤 Mark Maniora , slackbot

- 👤 Mark Maniora , Mark Maniora

- 👤 LaToya Palmer , Mark Maniora

- 👤 Cameron Rentch , Mark Maniora

- 👤 Mike Everhart , Mark Maniora

- 👤 sean , craig

- 👤 Ryan Vaspra , Mark Maniora

- 👤 Adrienne Hester , Mark Maniora

- 👤 Brian Hirst , Mark Maniora

- 👤 Tony , deleted-U04HFGHL4RW

- 👤 robert , slackbot

- 👤 Cameron Rentch , deleted-U05DY7UGM2L

- 👤 Mike Everhart , Joe Santana

- 👤 Google Calendar , Joe Santana

- 👤 deleted-U04KC9L4HRB , Mark Maniora

- 👤 Joe Santana , Joe Santana

- 👤 Joe Santana , slackbot

- 👤 sean , luke

- 👤 Dorshan Millhouse , Adrienne Hester

- 👤 Mike Everhart , deleted-U04GZ79CPNG

- 👤 Ryan Vaspra , deleted-U05DY7UGM2L

- 👤 Ryan Vaspra , deleted-U05CPGEJRD3

- 👤 Ryan Vaspra , deleted-UPH0C3A9K

- 👤 Adrienne Hester , deleted-U059JKB35RU

- 👤 Tony , Mark Maniora

- 👤 Dwight Thomas , slackbot

- 👤 Dwight Thomas , Dwight Thomas

- 👤 Mike Everhart , Dwight Thomas

- 👤 Tony , craig

- 👤 LaToya Palmer , Dwight Thomas

- 👤 Ryan Vaspra , Dwight Thomas

- 👤 Mark Maniora , Dwight Thomas

- 👤 Ryan Vaspra , deleted-U05EFH3S2TA

- 👤 Rose Fleming , slackbot

- 👤 Andy Rogers , deleted-U05DY7UGM2L

- 👤 Cameron Rentch , ian

- 👤 Tony Jones , Nicholas McFadden

- 👤 Greg Owen , slackbot

- 👤 Mike Everhart , Nicholas McFadden

- 👤 Nicholas McFadden , Nicholas McFadden

- 👤 Cameron Rentch , Nicholas McFadden

- 👤 Ryan Vaspra , Nicholas McFadden

- 👤 Tony , Nicholas McFadden

- 👤 deleted-U04HFGHL4RW , Greg Owen

- 👤 Brian Hirst , Nicholas McFadden

- 👤 Tony , Greg Owen

- 👤 Mark Maniora , Nicholas McFadden

- 👤 Mike Everhart , Greg Owen

- 👤 Andy Rogers , Greg Owen

- 👤 Kris Standley , David Temmesfeld

- 👤 Justin Troyer , slackbot

- 👤 Nicholas McFadden , slackbot

- 👤 luke , Greg Owen

- 👤 deleted-U05EFH3S2TA , Nicholas McFadden

- 👤 Mike Everhart , deleted-U05DY7UGM2L

- 👤 deleted-U04GZ79CPNG , Greg Owen

- 👤 Joe Santana , Nicholas McFadden

- 👤 Cameron Rentch , Rose Fleming

- 👤 Cameron Rentch , deleted-U04R23BEW3V

- 👤 deleted-U055HQT39PC , Mark Maniora

- 👤 Google Calendar , Caleb Peters

- 👤 Brian Hirst , Greg Owen

- 👤 Google Calendar , Greg Owen

- 👤 Google Calendar , greg

- 👤 Brian Hirst , deleted-U055HQT39PC

- 👤 Google Calendar , luke

- 👤 Google Calendar , Nicholas McFadden

- 👤 Google Calendar , Devin Dubin

- 👤 Google Calendar , kelly840

- 👤 Google Calendar , Dorshan Millhouse

- 👤 Tony , debora

- 👤 Google Calendar , brettmichael

- 👤 debora , slackbot

- 👤 Ryan Vaspra , Julie-Margaret Johnson

- 👤 debora , debora

- 👤 Google Calendar , Kris Standley

- 👤 Google Calendar , Luis Cortes

- 👤 Google Calendar , Brittany Barnett

- 👤 LaToya Palmer , debora

- 👤 Google Calendar , debora

- 👤 Brian Hirst , Dwight Thomas

- 👤 Mark Maniora , Greg Owen

- 👤 Julie-Margaret Johnson , Julie-Margaret Johnson

- 👤 Julie-Margaret Johnson , slackbot

- 👤 LaToya Palmer , Julie-Margaret Johnson

- 👤 Google Calendar , Dwight Thomas

- 👤 deleted-U05M3DME3GR , debora

- 👤 Adrienne Hester , Julie-Margaret Johnson

- 👤 Ryan Vaspra , Rose Fleming

- 👤 Adrienne Hester , Greg Owen

- 👤 Dwight Thomas , Julie-Margaret Johnson

- 👤 luke , debora

- 👤 Ryan Vaspra , debora

- 👤 Cameron Rentch , Julie-Margaret Johnson

- 👤 LaToya Palmer , deleted-U04GZ79CPNG

- 👤 deleted-U04GU9EUV9A , debora

- 👤 Andy Rogers , debora

- 👤 Greg Owen , Julie-Margaret Johnson

- 👤 LaToya Palmer , Greg Owen

- 👤 Adrienne Hester , debora

- 👤 korbin , slackbot

- 👤 Adrienne Hester , korbin

- 👤 Mark Hines , debora

- 👤 Maximus , Julie-Margaret Johnson

- 👤 Tony , Dwight Thomas

- 👤 Google Drive , Dwight Thomas

- 👤 Ryan Vaspra , Greg Owen

- 👤 Cameron Rentch , korbin

- 👤 Kelly C. Richardson, EdS , Julie-Margaret Johnson

- 👤 Mike Everhart , korbin

- 👤 Maximus , David Temmesfeld

- 👤 Ryan Vaspra , korbin

- 👤 Andy Rogers , Dwight Thomas

- 👤 LaToya Palmer , korbin

- 👤 Jack Altunyan , debora

- 👤 debora , Nayeli Salmeron

- 👤 luke , korbin

- 👤 debora , deleted-U061HNPF4H5

- 👤 Chris , debora

- 👤 Dwight Thomas , Quint Underwood

- 👤 Jack Altunyan , Quint Underwood

- 👤 craig , debora

- 👤 Ryan Vaspra , Quint Underwood

- 👤 Andy Rogers , korbin

- 👤 Lee Zafra , slackbot

- 👤 Dwight Thomas , Lee Zafra

- 👤 Quint Underwood , slackbot

- 👤 Lee Zafra , Lee Zafra

- 👤 LaToya Palmer , Lee Zafra

- 👤 Mark Maniora , Joe Santana

- 👤 Adrienne Hester , Lee Zafra

- 👤 Julie-Margaret Johnson , Lee Zafra

- 👤 Jack Altunyan , Dwight Thomas

- 👤 LaToya Palmer , deleted-U055HQT39PC

- 👤 Cameron Rentch , Lee Zafra

- 👤 Ryan Vaspra , Lee Zafra

- 👤 Maximus , Dorshan Millhouse

- 👤 Mike Everhart , michaelbrunet

- 👤 Cameron Rentch , michaelbrunet

- 👤 Mark Hines , Dwight Thomas

- 👤 Dwight Thomas , Nicholas McFadden

- 👤 Ryan Vaspra , michaelbrunet

- 👤 Google Calendar , James Scott

- 👤 korbin , Lee Zafra

- 👤 Cameron Rentch , debora

- 👤 Caleb Peters , Dorshan Millhouse

- 👤 deleted-U05DY7UGM2L , James Scott

- 👤 Cameron Rentch , James Scott

- 👤 James Scott , James Scott

- 👤 Ryan Vaspra , James Scott

- 👤 Marc Barmazel , James Scott

- 👤 Mike Everhart , freshdesk_legacy

- 👤 James Scott , slackbot

- 👤 Nsay Y , slackbot

- 👤 Ryan Vaspra , Nsay Y

- 👤 sean , debora

- 👤 Brian Hirst , Nsay Y

- 👤 LaToya Palmer , deleted-U05CTUBCP7E

- 👤 Cameron Rentch , Nsay Y

- 👤 Andy Rogers , Nsay Y

- 👤 Cameron Rentch , Michael McKinney

- 👤 Maximus , Nsay Y

- 👤 Ryan Vaspra , Michael McKinney

- 👤 Jack Altunyan , Dorshan Millhouse

- 👤 Dwight Thomas , Michael McKinney

- 👤 Michael McKinney , Michael McKinney

- 👤 Jack Altunyan , Michael McKinney

- 👤 Kris Standley , Nsay Y

- 👤 Michael McKinney , slackbot

- 👤 debora , Nsay Y

- 👤 Mike Everhart , Michael McKinney

- 👤 Intraday_Sold_Leads_Microservice , Quint Underwood

- 👤 Greg Owen , debora

- 👤 Maximus , Dwight Thomas

- 👤 Altonese Neely , slackbot

- 👤 Cameron Rentch , Altonese Neely

- 👤 debora , Altonese Neely

- 👤 Cameron Rentch , brian580

- 👤 debora , brian580

- 👤 Ryan Vaspra , brian580

- 👤 Ryan Vaspra , Altonese Neely

- 👤 Jack Altunyan , Altonese Neely

- 👤 deleted-U04HFGHL4RW , Adrienne Hester

- 👤 brian580 , slackbot

- 👤 Adrienne Hester , Altonese Neely

- 👤 Nicholas McFadden , James Scott

- 👤 Greg Owen , deleted-U06AYDQ4WVA

- 👤 Caleb Peters , Dwight Thomas

- 👤 Cameron Rentch , rachel

- 👤 Google Drive , rachel

- 👤 Mark Hines , Joe Santana

- 👤 Caleb Peters , Lee Zafra

- 👤 Dwight Thomas , Greg Owen

- 👤 deleted-U05DY7UGM2L , Nicholas McFadden

- 👤 Julie-Margaret Johnson , korbin

- 👤 Brian Hirst , James Scott

- 👤 Andy Rogers , deleted-U05CTUBCP7E

- 👤 Maximus , Mark Maniora

- 👤 Mark Hines , Lee Zafra

- 👤 deleted-U04GU9EUV9A , Adrienne Hester

- 👤 luke , craig

- 👤 luke , Nicholas McFadden

- 👤 korbin , deleted-U06AYDQ4WVA

- 👤 Intraday_Sold_Leads_Microservice , Michael McKinney

- 👤 Nicholas McFadden , Greg Owen

- 👤 John Gillespie , debora

- 👤 deleted-U04KC9L4HRB , brian580

- 👤 Adrienne Hester , Dwight Thomas

- 👤 Cameron Rentch , parsa

- 👤 Google Calendar , parsa

- 👤 Google Calendar , patsy

- 👤 parsa , slackbot

- 👤 Cameron Rentch , deleted-U067A4KAB9U

- 👤 Cameron Rentch , patsy

- 👤 LaToya Palmer , deleted-U05CXRE6TBK

- 👤 Adrienne Hester , patsy

- 👤 Mike Everhart , parsa

- 👤 Greg Owen , korbin

- 👤 deleted-U055HQT39PC , Nicholas McFadden

- 👤 deleted-U04GU9EUV9A , Julie-Margaret Johnson

- 👤 LaToya Palmer , deleted-U069G4WMMD4

- 👤 Dorshan Millhouse , Michael McKinney

- 👤 Adrienne Hester , deleted-U069G4WMMD4

- 👤 Dorshan Millhouse , parsa

- 👤 deleted-U055HQT39PC , korbin

- 👤 Mike Everhart , deleted-U04GU9EUV9A

- 👤 Kelly C. Richardson, EdS , korbin

- 👤 Cameron Rentch , Greg Owen

- 👤 Mark Maniora , deleted-U06AYDQ4WVA

- 👤 Dwight Thomas , deleted-U06AYDQ4WVA

- 👤 Ryan Vaspra , deleted-U0679HSE82K

- 👤 deleted-U056TJH4KUL , Dwight Thomas

- 👤 Andy Rogers , Mark Maniora

- 👤 Kelly C. Richardson, EdS , Altonese Neely

- 👤 Greg Owen , deleted-U06C7A8PVLJ

- 👤 Andy Rogers , Nicholas McFadden

- 👤 Andy Rogers , patsy

- 👤 deleted-U04GZ79CPNG , korbin

- 👤 Mike Everhart , Kelly C. Richardson, EdS

- 👤 Mark Maniora , debora

- 👤 brian580 , Altonese Neely

- 👤 deleted-U061HNPF4H5 , Nayeli Salmeron

- 👤 Julie-Margaret Johnson , patsy

- 👤 Mark Hines , Michael McKinney

- 👤 Tony , Lee Zafra

- 👤 deleted-U055HQT39PC , Dwight Thomas

- 👤 Adrienne Hester , deleted-U055HQT39PC

- 👤 ian , debora

- 👤 Google Calendar , darrell

- 👤 Ryan Vaspra , deleted-U04TA486SM6

- 👤 LaToya Palmer , deleted-U042MMWVCH2

- 👤 LaToya Palmer , deleted-U059JKB35RU

- 👤 Nicholas McFadden , darrell

- 👤 darrell , darrell

- 👤 darrell , slackbot

- 👤 Cameron Rentch , darrell

- 👤 Tony , Altonese Neely

- 👤 Google Drive , luke

- 👤 Brian Hirst , darrell

- 👤 Chris , brian580

- 👤 Mike Everhart , deleted-U06N28XTLE7

- 👤 luke , brian580

- 👤 Ryan Vaspra , Joe Santana

- 👤 deleted-U04R23BEW3V , Nicholas McFadden

- 👤 Ryan Vaspra , darrell

- 👤 sean , patsy

- 👤 Ryan Vaspra , patsy

- 👤 Greg Owen , brian580

- 👤 craig , brian580

- 👤 Mike Everhart , myjahnee739

- 👤 Greg Owen , patsy

- 👤 Kelly C. Richardson, EdS , deleted-U05DY7UGM2L

- 👤 luke , James Scott

- 👤 Mark Maniora , Julie-Margaret Johnson

- 👤 Maximus , Chris

- 👤 luke , patsy

- 👤 Andy Rogers , brian580

- 👤 Nicholas McFadden , Nick Ward

- 👤 Nick Ward , Nick Ward

- 👤 brian580 , patsy

- 👤 Nick Ward , slackbot

- 👤 Jack Altunyan , luke

- 👤 Google Drive , darrell

- 👤 myjahnee739 , Nick Ward

- 👤 deleted-U04GU9EUV9A , brian580

- 👤 Mark Maniora , patsy

- 👤 deleted-U05CTUBCP7E , Dwight Thomas

- 👤 Tony , brian580

- 👤 darrell , Nick Ward

- 👤 Google Calendar , deactivateduser168066

- 👤 Mark Maniora , deleted-U05DY7UGM2L

- 👤 deactivateduser168066 , deactivateduser168066

- 👤 Mike Everhart , ahsan

- 👤 Google Calendar , ahsan

- 👤 Joe Santana , ahsan

- 👤 Ryan Vaspra , Nick Ward

- 👤 Dwight Thomas , ahsan

- 👤 ahsan , slackbot

- 👤 Andy Rogers , deleted-U055HQT39PC

- 👤 deleted-U055HQT39PC , Greg Owen

- 👤 John Gillespie , deleted-U06HGJHJWQJ

- 👤 Nicholas McFadden , deactivateduser168066

- 👤 ahsan , ahsan

- 👤 craig , patsy

- 👤 monday.com , ahsan

- 👤 Ryan Vaspra , deactivateduser168066

- 👤 deactivateduser168066 , ahsan

- 👤 Joe Santana , Nick Ward

- 👤 Cameron Rentch , Nick Ward

- 👤 Mark Hines , brian580

- 👤 Google Calendar , David Evans

- 👤 Jack Altunyan , David Temmesfeld

- 👤 Nick Ward , deactivateduser168066

- 👤 Julie-Margaret Johnson , David Evans

- 👤 Mike Everhart , deactivateduser168066

- 👤 Cameron Rentch , deleted-U06C7A8PVLJ

- 👤 Maximus , ahsan

- 👤 Tony Jones , Adrienne Hester

- 👤 Malissa Phillips , slackbot

- 👤 Google Calendar , Malissa Phillips

- 👤 Greg Owen , Malissa Phillips

- 👤 Tony Jones , Greg Owen

- 👤 deleted-U04HFGHL4RW , Malissa Phillips

- 👤 Cameron Rentch , Malissa Phillips

- 👤 LaToya Palmer , ahsan

- 👤 Mark Maniora , Malissa Phillips

- 👤 David Evans , deactivateduser21835

- 👤 Google Calendar , deactivateduser21835

- 👤 Nicholas McFadden , Malissa Phillips

- 👤 Tony Jones , Malissa Phillips

- 👤 Andy Rogers , Malissa Phillips

- 👤 Nick Ward , Malissa Phillips

- 👤 Dwight Thomas , patsy

- 👤 Ryan Vaspra , Malissa Phillips

- 👤 Malissa Phillips , Malissa Phillips

- 👤 Cameron Rentch , Ralecia Marks

- 👤 Adrienne Hester , David Evans

- 👤 Mike Everhart , Nick Ward

- 👤 michaelbrunet , Ralecia Marks

- 👤 Nick Ward , Ralecia Marks

- 👤 Nicholas McFadden , ahsan

- 👤 deleted-U06N28XTLE7 , Malissa Phillips

- 👤 deleted-U055HQT39PC , Malissa Phillips

- 👤 Dwight Thomas , Nick Ward

- 👤 Brian Hirst , Nick Ward

- 👤 Aidan Celeste , slackbot

- 👤 deleted-U055HQT39PC , Nick Ward

- 👤 Mark Maniora , darrell

- 👤 Nsay Y , brian580

- 👤 Mark Maniora , Nick Ward

- 👤 ian , Mark Maniora

- 👤 brian580 , Malissa Phillips

- 👤 Joe Santana , Malissa Phillips

- 👤 korbin , deleted-U069G4WMMD4

- 👤 Aidan Celeste , Aidan Celeste

- 👤 Nick Ward , ahsan

- 👤 Google Drive , Nick Ward

- 👤 craig , Malissa Phillips

- 👤 deleted-U05CTUBCP7E , korbin

- 👤 LaToya Palmer , Nicholas McFadden

- 👤 patsy , Malissa Phillips

- 👤 Nicholas McFadden , Julie-Margaret Johnson

- 👤 Ryan Vaspra , GitHub

- 👤 LaToya Palmer , David Evans

- 👤 craig , Greg Owen

- 👤 Ryan Vaspra , ahsan

- 👤 deleted-U04G7CGMBPC , brian580

- 👤 Tony , Nsay Y

- 👤 deleted-U05DY7UGM2L , Lee Zafra

- 👤 Greg Owen , Nick Ward

- 👤 Google Drive , ahsan

- 👤 Greg Owen , ahsan

- 👤 luke , deleted-U072JS2NHRQ

- 👤 Dwight Thomas , deleted-U06N28XTLE7

- 👤 Cameron Rentch , David Evans

- 👤 deleted-U05DY7UGM2L , Nick Ward

- 👤 Ryan Vaspra , deleted-U072JS2NHRQ

- 👤 deleted-U04GZ79CPNG , Malissa Phillips

- 👤 Mike Everhart , Malissa Phillips

- 👤 deleted-U055HQT39PC , ahsan

- 👤 deleted-U072JS2NHRQ , ahsan

- 👤 Mark Hines , korbin

- 👤 Chris , Andy Rogers

- 👤 Greg Owen , David Evans

- 👤 Brian Hirst , ahsan

- 👤 Dwight Thomas , Malissa Phillips

- 👤 Luis Cortes , deleted-U055HQT39PC

- 👤 Melanie Macias , slackbot

- 👤 craig , David Evans

- 👤 Ryan Vaspra , Tony Jones

- 👤 ahsan , Ralecia Marks

- 👤 Melanie Macias , Melanie Macias

- 👤 Andy Rogers , Melanie Macias

- 👤 Cameron Rentch , Melanie Macias

- 👤 LaToya Palmer , Melanie Macias

- 👤 Nicholas McFadden , Melanie Macias

- 👤 Brittany Barnett , Melanie Macias

- 👤 Chris , Melanie Macias

- 👤 Google Calendar , Melanie Macias

- 👤 Ryan Vaspra , Melanie Macias

- 👤 Adrienne Hester , Melanie Macias

- 👤 David Evans , Melanie Macias

- 👤 deleted-U06AYDQ4WVA , Malissa Phillips

- 👤 Julie-Margaret Johnson , Melanie Macias

- 👤 luke , Malissa Phillips

- 👤 Luis Cortes , deleted-U06KK7QLM55

- 👤 darrell , Malissa Phillips

- 👤 Maximus , deleted-U04R23BEW3V

- 👤 Tony , deleted-U055HQT39PC

- 👤 Nick Ward , Anthony Soboleski

- 👤 Luis Cortes , deleted-U04HFGHL4RW

- 👤 Anthony Soboleski , Anthony Soboleski

- 👤 deleted-U06C7A8PVLJ , ahsan

- 👤 Cameron Rentch , deleted-U06N28XTLE7

- 👤 Caleb Peters , craig

- 👤 deleted-U04R23BEW3V , Malissa Phillips

- 👤 Nick Ward , Daniel Schussler

- 👤 Greg Owen , Melanie Macias

- 👤 Daniel Schussler , Daniel Schussler

- 👤 Nicholas McFadden , Daniel Schussler

- 👤 ahsan , Malissa Phillips

- 👤 David Evans , Malissa Phillips

- 👤 Daniel Schussler , slackbot

- 👤 Mark Maniora , Daniel Schussler

- 👤 Google Calendar , Daniel Schussler

- 👤 Mark Maniora , ahsan

- 👤 Dwight Thomas , Daniel Schussler

- 👤 ahsan , Daniel Schussler

- 👤 Ryan Vaspra , deleted-U06GD22CLDC

- 👤 Lee Zafra , Melanie Macias

- 👤 Andy Rogers , David Evans

- 👤 Mike Everhart , Daniel Schussler

- 👤 Maximus , ahsan

- 👤 Dwight Thomas , darrell

- 👤 Malissa Phillips , Sean Marshall

- 👤 Melanie Macias , Jennifer Durnell

- 👤 Dustin Surwill , Dustin Surwill

- 👤 Google Calendar , Jennifer Durnell

- 👤 deleted-U069G4WMMD4 , Melanie Macias

- 👤 Joe Santana , Jennifer Durnell

- 👤 Google Calendar , Dustin Surwill

- 👤 Nicholas McFadden , Dustin Surwill

- 👤 Nick Ward , Dustin Surwill

- 👤 Dwight Thomas , Dustin Surwill

- 👤 Jennifer Durnell , Jennifer Durnell

- 👤 Mark Maniora , Melanie Macias

- 👤 Dustin Surwill , slackbot

- 👤 Joe Santana , Dustin Surwill

- 👤 Cameron Rentch , Joe Santana

- 👤 Brian Hirst , Malissa Phillips

- 👤 Nicholas McFadden , deleted-U06NZP17L83

- 👤 deleted-U05QUSWUJA2 , Greg Owen

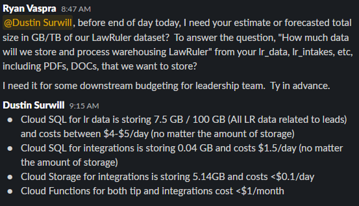

- 👤 Ryan Vaspra , Dustin Surwill

- 👤 Daniel Schussler , Dustin Surwill

- 👤 James Turner , Zekarias Haile

- 👤 Dustin Surwill , James Turner

- 👤 Daniel Schussler , James Turner

- 👤 ahsan , Dustin Surwill

- 👤 Google Calendar , Zekarias Haile

- 👤 Nicholas McFadden , James Turner

- 👤 Malissa Phillips , Daniel Schussler

- 👤 James Turner , slackbot

- 👤 Nick Ward , James Turner

- 👤 Dwight Thomas , Zekarias Haile

- 👤 Mark Maniora , James Turner

- 👤 Nick Ward , Zekarias Haile

- 👤 Google Calendar , craig630

- 👤 Zekarias Haile , Zekarias Haile

- 👤 James Turner , James Turner

- 👤 Daniel Schussler , Zekarias Haile

- 👤 Mark Maniora , Zekarias Haile

- 👤 ahsan , Zekarias Haile

- 👤 ahsan , James Turner

- 👤 Ryan Vaspra , David Evans

- 👤 Andy Rogers , James Scott

- 👤 Zekarias Haile , slackbot

- 👤 Google Calendar , James Turner

- 👤 Ryan Vaspra , James Turner

- 👤 Anthony Soboleski , James Turner

- 👤 Adrienne Hester , Joe Santana

- 👤 darrell , Anthony Soboleski

- 👤 darrell , Dustin Surwill

- 👤 MediaBuyerTools_TEST , slackbot

- 👤 Ralecia Marks , James Turner

- 👤 Google Calendar , jsantana707

- 👤 Maximus , brian580

- 👤 Dustin Surwill , Zekarias Haile

- 👤 Malissa Phillips , James Turner

- 👤 deleted-U05DY7UGM2L , brian580

- 👤 Nicholas McFadden , Zekarias Haile

- 👤 Rose Fleming , Michelle Cowan

- 👤 deleted-U05DY7UGM2L , Dwight Thomas

- 👤 James Turner , sian

- 👤 Brian Hirst , deleted-U05DY7UGM2L

- 👤 deleted-U04R23BEW3V , Dustin Surwill

- 👤 Dwight Thomas , James Turner

- 👤 deleted-U04GZ79CPNG , Nicholas McFadden

- 👤 deleted-U05CTUBCP7E , James Turner

- 👤 deleted-U055HQT39PC , James Turner

- 👤 Dorshan Millhouse , Melanie Macias

- 👤 Mike Everhart , Dustin Surwill

- 👤 sean , Malissa Phillips

- 👤 darrell , ahsan

- 👤 deleted-U055HQT39PC , Daniel Schussler

- 👤 Mark Hines , Malissa Phillips

- 👤 Joe Santana , Dwight Thomas

- 👤 deleted-U04GZ79CPNG , Joe Santana

- 👤 Sean Marshall , James Turner

- 👤 deleted-U05CTUBCP7E , Nicholas McFadden

- 👤 deleted-U04GZ79CPNG , James Turner

- 👤 Joe Santana , Michelle Cowan

- 👤 sean , brian580

- 👤 deleted-U06C7A8PVLJ , Zekarias Haile

- 👤 deleted-U055HQT39PC , Dustin Surwill

- 👤 Cameron Rentch , Dustin Surwill

- 👤 Kelly C. Richardson, EdS , brian580

- 👤 Google Calendar , Lee Zafra

- 👤 Brian Hirst , James Turner

- 👤 Henri Engle , slackbot

- 👤 Beau Kallas , slackbot

- 👤 Amanda Hembree , Amanda Hembree

- 👤 craig , James Turner

- 👤 David Temmesfeld , Melanie Macias

- 👤 James Turner , Amanda Hembree

- 👤 James Turner , Beau Kallas

- 👤 VernonEvans , slackbot

- 👤 James Turner , Henri Engle

- 👤 Yan Ashrapov , Yan Ashrapov

- 👤 deleted-U05496RCQ9M , Daniel Schussler

- 👤 Aidan Celeste , Zekarias Haile

- 👤 darrell , Daniel Schussler

- 👤 Amanda Hembree , slackbot

- 👤 James Turner , VernonEvans

- 👤 Yan Ashrapov , slackbot

- 👤 deleted-U04GZ79CPNG , Dustin Surwill

- 👤 Google Calendar , Yan Ashrapov

- 👤 Malissa Phillips , Dustin Surwill

- 👤 deleted-U06C7A8PVLJ , Malissa Phillips

- 👤 Joe Santana , Yan Ashrapov

- 👤 Maximus , Yan Ashrapov

- 👤 Caleb Peters , Yan Ashrapov

- 👤 Brian Hirst , Dustin Surwill

- 👤 Ryan Vaspra , Zekarias Haile

- 👤 Jack Altunyan , Yan Ashrapov

- 👤 Maximus , Melanie Macias

- 👤 deleted-U06F9N9PB2A , Malissa Phillips

- 👤 Google Drive , Yan Ashrapov

- 👤 brian580 , Yan Ashrapov

- 👤 Joe Santana , James Turner

- 👤 Cameron Rentch , Yan Ashrapov

- 👤 Dustin Surwill , sian

- 👤 Dorshan Millhouse , Yan Ashrapov

- 👤 Mark Maniora , Dustin Surwill

- 👤 Melanie Macias , Yan Ashrapov

- 👤 deleted-U06GD22CLDC , Malissa Phillips

- 👤 Cameron Rentch , James Turner

- 👤 Adrienne Hester , James Turner

- 👤 James Scott , James Turner

- 👤 Dorshan Millhouse , Jennifer Durnell

- 👤 Ryan Vaspra , Yan Ashrapov

- 👤 deleted-U06AYDQ4WVA , Daniel Schussler

- 👤 deleted-U04GZ79CPNG , Daniel Schussler

- 👤 deleted-U05496RCQ9M , Dwight Thomas

- 👤 Joe Santana , Zekarias Haile

- 👤 Aidan Celeste , James Turner

- 👤 Aidan Celeste , Dustin Surwill

- 👤 Nsay Y , ahsan

- 👤 Quint , James Turner

- 👤 craig , Nsay Y

- 👤 Nicholas McFadden , deleted-U06F9N9PB2A

- 👤 Malissa Phillips , Melanie Macias

- 👤 Malissa Phillips , Zekarias Haile

- 👤 David Temmesfeld , Yan Ashrapov

- 👤 Greg Owen , Zekarias Haile

- 👤 brian580 , James Turner

- 👤 Nsay Y , Jennifer Durnell

- 👤 IT SUPPORT - Dennis Shaull , slackbot

- 👤 Luis Cortes , Nicholas McFadden

- 👤 Google Calendar , IT SUPPORT - Dennis Shaull

- 👤 Cameron Rentch , Jennifer Durnell

- 👤 James Scott , Nsay Y

- 👤 deleted-U04TA486SM6 , Dustin Surwill

- 👤 Brian Hirst , Zekarias Haile

- 👤 darrell , James Turner

- 👤 deleted-U06GD22CLDC , James Turner

- 👤 Kelly C. Richardson, EdS , Melanie Macias

- 👤 deleted-U05F548NU5V , Yan Ashrapov

- 👤 Kelly C. Richardson, EdS , Lee Zafra

- 👤 deleted-U062D2YJ15Y , James Turner

- 👤 deleted-U06VDQGHZLL , James Turner

- 👤 Yan Ashrapov , deleted-U084U38R8DN

- 👤 David Evans , Sean Marshall

- 👤 Serebe , slackbot

- 👤 Ryan Vaspra , Serebe

- 👤 LaToya Palmer , Jennifer Durnell

- 👤 James Scott , brian580

- 👤 Aidan Celeste , IT SUPPORT - Dennis Shaull

- 👤 Greg Owen , deleted-U07SBM5MB32

- 👤 deleted-U06C7A8PVLJ , Dustin Surwill

- 👤 Mark Hines , IT SUPPORT - Dennis Shaull

- 👤 darrell , Zekarias Haile

- 👤 Lee Zafra , Malissa Phillips

- 👤 Dustin Surwill , IT SUPPORT - Dennis Shaull

- 👤 Mark Maniora , IT SUPPORT - Dennis Shaull

- 👤 LaToya Palmer , IT SUPPORT - Dennis Shaull

- 👤 Mike Everhart , Yan Ashrapov

- 👤 Joe Santana , darrell

- 👤 deleted-U05G9BHC4GY , Yan Ashrapov

- 👤 Joe Santana , Greg Owen

- 👤 Nicholas McFadden , Nsay Y

- 👤 deleted-U05CTUBCP7E , Daniel Schussler

- 👤 brian580 , IT SUPPORT - Dennis Shaull

- 👤 darrell , IT SUPPORT - Dennis Shaull

- 👤 Nsay Y , darrell

- 👤 Nicholas McFadden , Yan Ashrapov

- 👤 deleted-U04TA486SM6 , Malissa Phillips

- 👤 Joe Santana , deleted-U06VDQGHZLL

- 👤 deleted-U072B7ZV4SH , ahsan

- 👤 Joe Santana , Daniel Schussler

- 👤 Greg Owen , Dustin Surwill

- 👤 deleted-U05QUSWUJA2 , Dustin Surwill

- 👤 greg , brian580

- 👤 Melanie Macias , Daniel Schussler

- 👤 Malissa Phillips , sian

- 👤 deleted-U07097NJDA7 , Nick Ward

- 👤 James Scott , Jehoshua Josue

- 👤 Google Calendar , Jehoshua Josue

- 👤 deleted-U055HQT39PC , Zekarias Haile

- 👤 deleted-U06C7A8PVLJ , wf_bot_a0887aydgeb

- 👤 deleted-U05DY7UGM2L , Melanie Macias

- 👤 Brian Hirst , Yan Ashrapov

- 👤 Cameron Rentch , deleted-U06GD22CLDC

- 👤 Joe Santana , Jehoshua Josue

- 👤 Jehoshua Josue , Jehoshua Josue

- 👤 deleted-U04HFGHL4RW , Joe Santana

- 👤 James Scott , Dustin Surwill

- 👤 ahsan , Edward Weber

- 👤 Rose Fleming , Malissa Phillips

- 👤 Greg Owen , James Turner

- 👤 Daniel Schussler , Jehoshua Josue

- 👤 Greg Owen , deleted-U083HCG9AUR

- 👤 James Scott , Nick Ward

- 👤 Nicholas McFadden , Jehoshua Josue

- 👤 LaToya Palmer , deleted-U06K957RUGY

- 👤 deleted-U04HFGHL4RW , James Turner

- 👤 Rose Fleming , brian580

- 👤 deleted-U06CVB2MHRN , Dustin Surwill

- 👤 deleted-U04TA486SM6 , Nicholas McFadden

- 👤 Brian Hirst , Joe Santana

- 👤 deleted-U04GG7BCZ5M , Malissa Phillips

- 👤 ahsan , Jehoshua Josue

- 👤 deleted-U06F9N9PB2A , ahsan

- 👤 Nicholas McFadden , Jennifer Durnell

- 👤 deleted-U04R23BEW3V , James Turner

- 👤 deleted-U05DY7UGM2L , James Turner

- 👤 Dustin Surwill , deleted-U08E306T7SM

- 👤 ahsan , sian

- 👤 Mark Maniora , Lee Zafra

- 👤 Maximus , Nicholas McFadden

- 👤 Google Drive , James Scott

- 👤 deleted-U026DSD2R1B , Dorshan Millhouse

- 👤 Brian Hirst , Rose Fleming

- 👤 Malissa Phillips , deleted-U083HCG9AUR

- 👤 Malissa Phillips , IT SUPPORT - Dennis Shaull

- 👤 Jennifer Durnell , sian

- 👤 LaToya Palmer , Nick Ward

- 👤 James Scott , Zekarias Haile

- 👤 deleted-U04TA486SM6 , Rose Fleming

- 👤 deleted-U04GZ79CPNG , Rose Fleming

- 👤 deleted-U06F9N9PB2A , Dustin Surwill

- 👤 deleted-U06C7A8PVLJ , Jehoshua Josue

- 👤 Caleb Peters , deleted-U04R23BEW3V

- 👤 Greg Owen , deleted-U08E306T7SM

- 👤 Rose Fleming , James Turner

- 👤 ahsan , GitHub

- 👤 deleted-U072B7ZV4SH , Dustin Surwill

- 👤 deleted-U06GD22CLDC , ahsan

- 👤 deleted-U06CVB2MHRN , Zekarias Haile

- 👤 Dustin Surwill , deleted-U08FJ290LUX

- 👤 Kelly C. Richardson, EdS , John Gillespie

- 👤 Greg Owen , Lee Zafra

- 👤 David Evans , Kimberly Hubbard

- 👤 James Turner , Jehoshua Josue

- 👤 Cameron Rentch , Jehoshua Josue

- 👤 Google Calendar , john

- 👤 Dwight Thomas , Yan Ashrapov

- 👤 Joe Santana , IT SUPPORT - Dennis Shaull

- 👤 Daniel Schussler , deleted-U07PQ4H089G

- 👤 deleted-U05EFH3S2TA , Rose Fleming

- 👤 Nsay Y , Amanda Hembree

- 👤 Nick Ward , Welcome message

- 👤 deleted-U06C7A8PVLJ , Welcome message

- 👤 sean , Melanie Macias

- 👤 deleted-U04GG7BCZ5M , Dustin Surwill

- 👤 deleted-U06GD22CLDC , Welcome message

- 👤 Zekarias Haile , Jehoshua Josue

- 👤 Andy Rogers , Rose Fleming

- 👤 deleted-U059JKB35RU , Lee Zafra

- 👤 Maximus , deleted-U05F548NU5V

- 👤 Mark Maniora , Yan Ashrapov

- 👤 Nicholas McFadden , Edward Weber

- 👤 Mark Hines , Yan Ashrapov

- 👤 Dustin Surwill , Jehoshua Josue

- 👤 Luis Cortes , deleted-U04R23BEW3V

- 👤 deleted-U06C7A8PVLJ , darrell

- 👤 Cameron Rentch , deleted-U05G9BGQFBJ

- 👤 James Turner , deleted-U08E306T7SM

- 👤 Nicholas McFadden , Lee Zafra

- 👤 Nicholas McFadden , deleted-U07SBM5MB32

- 👤 Rose Fleming , Zekarias Haile

- 👤 Dustin Surwill , deleted-U07PQ4H089G

- 👤 deleted-U05EFH3S2TA , James Turner

- 👤 deleted-U06C7A8PVLJ , Daniel Schussler

- 👤 Google Calendar , CC Kitanovski

- 👤 Malissa Phillips , CC Kitanovski

- 👤 Richard Schnitzler , Richard Schnitzler

- 👤 Nick Ward , Jehoshua Josue

- 👤 Cameron Rentch , CC Kitanovski

- 👤 deleted-U055HQT39PC , Joe Santana

- 👤 Nicholas McFadden , Richard Schnitzler

- 👤 Joe Santana , Richard Schnitzler

- 👤 Google Calendar , Richard Schnitzler

- 👤 Dustin Surwill , Richard Schnitzler

- 👤 Daniel Schussler , Richard Schnitzler

- 👤 Greg Owen , deleted-U08FJ290LUX

- 👤 Nicholas McFadden , CC Kitanovski

- 👤 Ryan Vaspra , Daniel Schussler

- 👤 deleted-U06F9N9PB2A , CC Kitanovski

- 👤 Joe Santana , David Evans

- 👤 Kelly C. Richardson, EdS , Joe Woods

- 👤 Sean Marshall , Dustin Surwill

- 👤 Jira , Daniel Schussler

- 👤 Maximus , James Turner

- 👤 deleted-U05QUSWUJA2 , James Turner

- 👤 Nick Ward , Richard Schnitzler

- 👤 Jira , Richard Schnitzler

- 👤 Melanie Macias , CC Kitanovski

- 👤 Anthony Soboleski , Richard Schnitzler

- 👤 James Turner , deleted-U08N0J1U7GD

- 👤 Jira , Edward Weber

- 👤 deleted-U01AJT99FAQ , Joe Santana

- 👤 Dorshan Millhouse , deleted-U08RHDRJKP0

- 👤 Lee Zafra , Nsay Y

- 👤 deleted-U05EFH3S2TA , Dustin Surwill

- 👤 Joe Santana , deleted-U05EFH3S2TA

- 👤 James Turner , deleted-U08T4FKSC04

- 👤 Jira , Dustin Surwill

- 👤 James Turner , deleted-U08FJ290LUX

- 👤 Mark Hines , James Turner

- 👤 Nicholas McFadden , Chris Krecicki

- 👤 Richard Schnitzler , Chris Krecicki

- 👤 James Scott , Chris Krecicki

- 👤 Greg Owen , Daniel Schussler

- 👤 Dwight Thomas , Richard Schnitzler

- 👤 Tyson Green , Tyson Green

- 👤 Dustin Surwill , Tyson Green

- 👤 Google Calendar , Tyson Green

- 👤 Andy Rogers , Chris Krecicki

- 👤 Dustin Surwill , Chris Krecicki

- 👤 Tyson Green , Connie Bronowitz

- 👤 Jira , Nick Ward

- 👤 Nsay Y , Connie Bronowitz

- 👤 Joe Santana , deleted-U06F9N9PB2A

- 👤 Joe Santana , Tyson Green

- 👤 Chris Krecicki , Tyson Green

- 👤 Luis Cortes , James Turner

- 👤 Andy Rogers , Joe Santana

- 👤 Edward Weber , Chris Krecicki

- 👤 Joe Santana , Chris Krecicki

- 👤 Nick Ward , Chris Krecicki

- 👤 James Turner , deleted-U08SZHALMBM

- 👤 Jehoshua Josue , Chris Krecicki

- 👤 Ryan Vaspra , Chris Krecicki

- 👤 Nicholas McFadden , Tyson Green

- 👤 Chris Krecicki , Connie Bronowitz

- 👤 Ryan Vaspra , Edward Weber

- 👤 Henri Engle , Chris Krecicki

- 👤 James Turner , Carter Matzinger

- 👤 Joe Santana , Connie Bronowitz

- 👤 Cameron Rentch , Carter Matzinger

- 👤 Carter Matzinger , Carter Matzinger

- 👤 Connie Bronowitz , Carter Matzinger

- 👤 Confluence , Richard Schnitzler

- 👤 deleted-U06F9N9PB2A , James Turner

- 👤 deleted-U04HFGHL4RW , Dustin Surwill

- 👤 Joe Santana , Carter Matzinger

- 👤 Dustin Surwill , Carter Matzinger

- 👤 Dustin Surwill , Edward Weber

- 👤 Nick Ward , Edward Weber

- 👤 Mark Maniora , Carter Matzinger

- 👤 Daniel Schussler , Carter Matzinger

- 👤 Richard Schnitzler , Carter Matzinger

- 👤 Mark Maniora , Chris Krecicki

- 👤 deleted-UCBB2GYLR , Ryan Vaspra

- 👤 Malissa Phillips , Carter Matzinger

- 👤 Brian Hirst , deleted-U07AD5U5E6S

- 👤 Confluence , Nicholas McFadden

- 👤 Confluence , Dustin Surwill

- 👤 Nicholas McFadden , Carter Matzinger

- 👤 James Turner , Chris Krecicki

- 👤 Cameron Rentch , Chris Krecicki

- 👤 Confluence , Edward Weber

- 👤 Confluence , Joe Santana

- 👤 Edward Weber , Carter Matzinger

- 👤 Jira , Zekarias Haile

- 👤 Dwight Thomas , Chris Krecicki

- 👤 deleted-U04GG7BCZ5M , Greg Owen

- 👤 David Evans , Jennifer Durnell

- 👤 Dwight Thomas , Tyson Green

- 👤 Chris Krecicki , Carter Matzinger

- 👤 deleted-U05496RCQ9M , Carter Matzinger

- 👤 Jennifer Durnell , CC Kitanovski

- 👤 Andy Rogers , Carter Matzinger

- 👤 Joe Santana , deleted-U06C7A8PVLJ

- 👤 Jehoshua Josue , Richard Schnitzler

- 👤 James Scott , CC Kitanovski

- 👤 Maximus , Rose Fleming

- 👤 Joe Santana , Amanda Hembree

- 👤 CC Kitanovski , Chris Krecicki

- 👤 Joe Santana , Kimberly Barber

- 👤 David Evans , Connie Bronowitz

- 👤 Ryan Vaspra , CC Kitanovski

- 👤 Adrienne Hester , Kimberly Barber

- 👤 Ryan Vaspra , Carter Matzinger

- 👤 James Turner , CC Kitanovski

- 👤 Dustin Surwill , Henri Engle

- 👤 deleted-U06C7A8PVLJ , Chris Krecicki

- 👤 Dustin Surwill , CC Kitanovski

- 👤 James Turner , deleted-U08LK2U7YBW

- 👤 Brian Hirst , CC Kitanovski

- 👤 Anthony Soboleski , Chris Krecicki

- 👤 Dustin Surwill , Welcome message

- 👤 Tyson Green , Kimberly Barber

- 👤 Edward Weber , Welcome message

- 👤 Nick Ward , Welcome message

- 👤 deleted-U06VDQGHZLL , Dustin Surwill

- 👤 Google Drive , Richard Schnitzler

- 👤 Jehoshua Josue , Tyson Green

- 👤 Kris Standley , Yan Ashrapov

- 👤 Andy Rogers , CC Kitanovski

- 👤 deleted-U06C7A8PVLJ , Welcome message

- 👤 Ralecia Marks , Tyson Green

- 👤 James Turner , Item status notification

- 👤 Carter Matzinger , Welcome message

- 👤 Ryan Vaspra , deleted-U042MMWVCH2

- 👤 deleted-U042MMWVCH2 , Adrienne Hester

- 👤 deleted-U059JKB35RU , Julie-Margaret Johnson

- 👤 deleted-U042MMWVCH2 , korbin

- 👤 deleted-U059JKB35RU , Melanie Macias

- 👤 deleted-U059JKB35RU , Jennifer Durnell

- 👤 Ryan Vaspra , deleted-U02JM6UC78T

- 👤 deleted-U02JM6UC78T , Kris Standley

- 👤 Joe Santana , deleted-U08N0J1U7GD

- 👤 Joe Santana , deleted-U08SZHALMBM

- 👤 deleted-U6Z58C197 , James Turner

- 👤 Ryan Vaspra , deleted-U0410U6Q8J3

- 👤 Brian Hirst , deleted-U0410U6Q8J3

- 👤 deleted-U05G9BGQFBJ , Yan Ashrapov

- 👤 Cameron Rentch , deleted-U05G9BHC4GY

- 👤 Brian Hirst , deleted-U04KC9L4HRB

- 👤 Mike Everhart , deleted-U04KC9L4HRB

- 👤 Cameron Rentch , deleted-U04KC9L4HRB

- 👤 Brian Hirst , deleted-U04HFGHL4RW

- 👤 LaToya Palmer , deleted-U04KC9L4HRB

- 👤 Cameron Rentch , deleted-U04HFGHL4RW

- 👤 Ryan Vaspra , deleted-U04GZ79CPNG

- 👤 luke , deleted-U04GZ79CPNG

- 👤 Ryan Vaspra , deleted-U04HFGHL4RW

- 👤 Brian Hirst , deleted-U04GZ79CPNG

- 👤 Andy Rogers , deleted-U04GZ79CPNG

- 👤 Brian Hirst , deleted-U04GU9EUV9A

- 👤 Mike Everhart , deleted-U04HFGHL4RW

- 👤 LaToya Palmer , deleted-U05DY7UGM2L

- 👤 Adrienne Hester , deleted-U05DY7UGM2L

- 👤 Luis Cortes , deleted-U04KC9L4HRB

- 👤 deleted-U04GZ79CPNG , Mark Maniora

- 👤 Ryan Vaspra , deleted-U05QUSWUJA2

- 👤 deleted-U04R23BEW3V , Mark Maniora

- 👤 Ryan Vaspra , deleted-U04R23BEW3V

- 👤 luke , deleted-U05R2LQKHHR

- 👤 Tony , deleted-U05M3DME3GR

- 👤 Ryan Vaspra , deleted-U055HQT39PC

- 👤 deleted-U04GZ79CPNG , Julie-Margaret Johnson

- 👤 brettmichael , deleted-U04GWKX1RKM

- 👤 Cameron Rentch , deleted-U04GU9EUV9A

- 👤 Cameron Rentch , deleted-U05EFH3S2TA

- 👤 deleted-U04GZ79CPNG , Dwight Thomas

- 👤 Mark Maniora , deleted-U05R2LQKHHR

- 👤 Ryan Vaspra , deleted-U04GU9EUV9A

- 👤 Ryan Vaspra , deleted-U06AYDQ4WVA

- 👤 Andy Rogers , deleted-U06AYDQ4WVA

- 👤 luke , deleted-U06C7A8PVLJ

- 👤 Andy Rogers , deleted-U04R23BEW3V

- 👤 luke , deleted-U06AYDQ4WVA

- 👤 deleted-U04GZ79CPNG , debora

- 👤 Tony , deleted-U06AYDQ4WVA

- 👤 John Gillespie , deleted-U06AYDQ4WVA

- 👤 Adrienne Hester , deleted-U05QUSWUJA2

- 👤 Mike Everhart , deleted-U06GKHHCDNF

- 👤 ian , deleted-U06AYDQ4WVA

- 👤 debora , deleted-U06AYDQ4WVA

- 👤 Mike Everhart , deleted-U055HQT39PC

- 👤 deleted-U04HFGHL4RW , Altonese Neely

- 👤 deleted-U04GU9EUV9A , Altonese Neely

- 👤 Andy Rogers , deleted-U04GU9EUV9A

- 👤 Adrienne Hester , deleted-U04TA486SM6

- 👤 deleted-U06AYDQ4WVA , Altonese Neely

- 👤 Nicholas McFadden , deleted-U06AYDQ4WVA

- 👤 John Gillespie , deleted-U054PFD3Q6N

- 👤 deleted-U04R23BEW3V , korbin

- 👤 deleted-U04GZ79CPNG , Adrienne Hester

- 👤 Greg Owen , deleted-U06N28XTLE7

- 👤 Nicholas McFadden , deleted-U06N28XTLE7

- 👤 Tony , deleted-U06GKHHCDNF

- 👤 Mike Everhart , deleted-U06AYDQ4WVA

- 👤 luke , deleted-U06N28XTLE7

- 👤 John Gillespie , deleted-U05D70MB62W

- 👤 deleted-U04GZ79CPNG , brian580

- 👤 Ryan Vaspra , deleted-U06N28XTLE7

- 👤 deleted-U04HFGHL4RW , brian580

- 👤 John Gillespie , deleted-U06S99AE6C9

- 👤 Luis Cortes , deleted-U072JS2NHRQ

- 👤 Greg Owen , deleted-U072JS2NHRQ

- 👤 LaToya Palmer , deleted-U06AYDQ4WVA

- 👤 Cameron Rentch , deleted-U055HQT39PC

- 👤 Cameron Rentch , deleted-U05ANA2ULDD

- 👤 Cameron Rentch , deleted-U071ST51P18

- 👤 Dwight Thomas , deleted-U05QUSWUJA2

- 👤 korbin , deleted-U06GD22CLDC

- 👤 deleted-U04HFGHL4RW , Nicholas McFadden

- 👤 deleted-U04R23BEW3V , ahsan

- 👤 deleted-U05DY7UGM2L , korbin

- 👤 Brittany Barnett , deleted-U05EFH3S2TA

- 👤 Cameron Rentch , deleted-U072JS2NHRQ

- 👤 Ryan Vaspra , deleted-U072B7ZV4SH

- 👤 Andy Rogers , deleted-U072JS2NHRQ

- 👤 Nicholas McFadden , deleted-U06C7A8PVLJ

- 👤 deleted-U05496RCQ9M , Mark Maniora

- 👤 deleted-U072JS2NHRQ , Malissa Phillips

- 👤 brian580 , deleted-U072JS2NHRQ

- 👤 Ryan Vaspra , deleted-U05PM8ZHCF4

- 👤 Mike Everhart , deleted-U05PM8ZHCF4

- 👤 deleted-U05EFH3S2TA , Vilma Farrar

- 👤 deleted-U04GZ79CPNG , Ralecia Marks

- 👤 Tony Jones , deleted-U06N28XTLE7

- 👤 Nicholas McFadden , deleted-U072B7ZV4SH

- 👤 deleted-U06NZP17L83 , Daniel Schussler

- 👤 deleted-U05EFH3S2TA , Malissa Phillips

- 👤 deleted-U06N28XTLE7 , ahsan

- 👤 deleted-U05496RCQ9M , Nick Ward

- 👤 deleted-U05496RCQ9M , James Turner

- 👤 deleted-U05496RCQ9M , Zekarias Haile

- 👤 deleted-U06NZP17L83 , James Turner

- 👤 deleted-U04GZ79CPNG , ahsan

- 👤 deleted-U06C7A8PVLJ , James Turner

- 👤 deleted-U05496RCQ9M , ahsan

- 👤 Nick Ward , deleted-U072JS2NHRQ

- 👤 deleted-U05496RCQ9M , Dustin Surwill

- 👤 deleted-U06C7A8PVLJ , Nick Ward

- 👤 deleted-U05DY7UGM2L , Malissa Phillips

- 👤 Nicholas McFadden , deleted-U072JS2NHRQ

- 👤 deleted-U072JS2NHRQ , James Turner

- 👤 Dwight Thomas , deleted-U0679HSE82K

- 👤 deleted-U05ANA2ULDD , Nick Ward

- 👤 Brian Hirst , deleted-U072JS2NHRQ

- 👤 deleted-U04HFGHL4RW , Nsay Y

- 👤 deleted-U06AYDQ4WVA , VernonEvans

- 👤 Tony , deleted-U05EFH3S2TA

- 👤 Cameron Rentch , deleted-U07JFNH9C7P

- 👤 Ryan Vaspra , deleted-U06C7A8PVLJ

- 👤 deleted-U04R23BEW3V , Yan Ashrapov

- 👤 deleted-U072JS2NHRQ , Dustin Surwill

- 👤 Dwight Thomas , deleted-U072JS2NHRQ

- 👤 brian580 , deleted-U071ST51P18

- 👤 Nsay Y , deleted-U06AYDQ4WVA

- 👤 Nicholas McFadden , deleted-U06GD22CLDC

- 👤 deleted-U05496RCQ9M , Greg Owen

- 👤 Nsay Y , deleted-U081KUX6TKM

- 👤 deleted-U05496RCQ9M , Malissa Phillips

- 👤 deleted-U05QUSWUJA2 , brian580

- 👤 deleted-U06N28XTLE7 , Dustin Surwill

- 👤 Cameron Rentch , deleted-U04TA486SM6

- 👤 Cameron Rentch , deleted-U07097NJDA7

- 👤 Dustin Surwill , deleted-U07SBM5MB32

- 👤 Cameron Rentch , deleted-U06F9N9PB2A

- 👤 deleted-U06GD22CLDC , Dustin Surwill

- 👤 Nick Ward , deleted-U07SBM5MB32

- 👤 Andy Rogers , deleted-U062D2YJ15Y

- 👤 deleted-U05QUSWUJA2 , ahsan

- 👤 Mike Everhart , deleted-U05QUSWUJA2

- 👤 Mark Maniora , deleted-U062D2YJ15Y

- 👤 deleted-U04GZ79CPNG , darrell

- 👤 Mark Hines , deleted-U07JFNH9C7P

- 👤 deleted-U06GD22CLDC , Daniel Schussler

- 👤 deleted-U062D2YJ15Y , Dustin Surwill

- 👤 Cameron Rentch , deleted-U083HCG9AUR

- 👤 Ryan Vaspra , deleted-U083HCG9AUR

- 👤 Nicholas McFadden , deleted-U083HCG9AUR

- 👤 deleted-U04GG7BCZ5M , Nicholas McFadden

- 👤 Malissa Phillips , deleted-U07SBM5MB32

- 👤 deleted-U07SBM5MB32 , IT SUPPORT - Dennis Shaull

- 👤 Nicholas McFadden , deleted-U06CVB2MHRN

- 👤 deleted-U072B7ZV4SH , Malissa Phillips

- 👤 Nsay Y , deleted-U06MDMJ4X96

- 👤 ahsan , deleted-U07SBM5MB32

- 👤 deleted-U072JS2NHRQ , Aidan Celeste

- 👤 Vilma Farrar , deleted-U077J4CPUCC

- 👤 darrell , deleted-U072JS2NHRQ

- 👤 Mike Everhart , deleted-U04J34Q7V4H

- 👤 deleted-U04GG7BCZ5M , ahsan

- 👤 Aidan Celeste , deleted-U08FJ290LUX

- 👤 IT SUPPORT - Dennis Shaull , deleted-U08E306T7SM

- 👤 Aidan Celeste , deleted-U083HCG9AUR

- 👤 deleted-U04R23BEW3V , Greg Owen

- 👤 Dwight Thomas , deleted-U07LSBPN6D8

- 👤 deleted-U06CVB2MHRN , James Turner

- 👤 Dustin Surwill , deleted-U08GMHAPRK2

- 👤 Nick Ward , deleted-U08LK2U7YBW

- 👤 deleted-U04GZ79CPNG , Zekarias Haile

- 👤 Zekarias Haile , deleted-U07SBM5MB32

- 👤 deleted-U06K9EUEPLY , darrell

- 👤 deleted-U04HTA3157W , Amanda Hembree

- 👤 Joe Santana , deleted-U083HCG9AUR

- 👤 Brian Hirst , deleted-U06C7A8PVLJ

- 👤 Cameron Rentch , deleted-U05QUSWUJA2

- 👤 Nicholas McFadden , deleted-U07JFNH9C7P

- 👤 deleted-U04GZ79CPNG , Jehoshua Josue

- 👤 deleted-U072JS2NHRQ , Jehoshua Josue

- 👤 deleted-U06C7A8PVLJ , Richard Schnitzler

- 👤 deleted-U05EFH3S2TA , Richard Schnitzler

- 👤 Mark Maniora , deleted-U06C7A8PVLJ

- 👤 Andy Rogers , deleted-U06F9N9PB2A

- 👤 Ryan Vaspra , deleted-U07CRGCBVJA

- 👤 Andy Rogers , deleted-U06C7A8PVLJ

- 👤 Andy Rogers , deleted-U05EFH3S2TA

- 👤 deleted-U04GZ79CPNG , Chris Krecicki

- 👤 Nicholas McFadden , deleted-U095QBJFFME

- 👤 deleted-U05EFH3S2TA , Carter Matzinger

- 👤 Aidan Celeste , deleted-U08GMHAPRK2

- 👤 deleted-U04GZ79CPNG , Carter Matzinger

- 👤 deleted-U06CR5MH88H , Malissa Phillips

- 👤 deleted-U06C7A8PVLJ , Carter Matzinger

- 👤 Cameron Rentch , deleted-U056TJH4KUL

- 👤 Tony , deleted-U056TJH4KUL

- 👤 Ryan Vaspra , deleted-U056TJH4KUL

- 👤 Ryan Vaspra , deleted-U05CTUBCP7E

- 👤 Ryan Vaspra , deleted-U05CXRE6TBK

- 👤 Adrienne Hester , deleted-U05CTUBCP7E

- 👤 Nicholas McFadden , deleted-U07PQ4H089G

- 👤 Ryan Vaspra , deleted-U069G4WMMD4

- 👤 Dorshan Millhouse , deleted-U08RRDQCPU2

Group Direct Messages

- 👥 Jack Altunyan, Cameron Rentch, Maximus,

- 👥 Cameron Rentch, Ryan Vaspra, Maximus,

- 👥 Cameron Rentch, Ryan Vaspra, Mark Hines,

- 👥 Mike Everhart, Cameron Rentch, Ryan Vaspra,

- 👥 Cameron Rentch, Ryan Vaspra, Tony,

- 👥 Cameron Rentch, john, Caleb Peters,

- 👥 Cameron Rentch, Ryan Vaspra, Kelly C. Richardson, EdS,

- 👥 Cameron Rentch, Ryan Vaspra, Brian Hirst,

- 👥 Cameron Rentch, Ryan Vaspra, Kris Standley,

- 👥 Cameron Rentch, Ryan Vaspra, Andy Rogers,

- 👥 Cameron Rentch, Tony, brettmichael, deleted-U04GU9EUV9A,

- 👥 Ryan Vaspra, Maximus, Mark Hines,

- 👥 Chris, David Temmesfeld, Dorshan Millhouse,

- 👥 Ryan Vaspra, Mark Hines, LaToya Palmer,

- 👥 Mike Everhart, Ryan Vaspra, Maximus,

- 👥 Ryan Vaspra, Kelly C. Richardson, EdS, LaToya Palmer,

- 👥 Mike Everhart, Cameron Rentch, Mark Hines,

- 👥 Cameron Rentch, Andy Rogers, craig,

- 👥 Mike Everhart, Cameron Rentch, john,

- 👥 Jack Altunyan, Ryan Vaspra, Maximus, Caleb Peters,

- 👥 Mike Everhart, Mark Hines, LaToya Palmer,

- 👥 Ryan Vaspra, Andy Rogers, luke,

- 👥 Cameron Rentch, Ryan Vaspra, Andy Rogers, luke,

- 👥 Mike Everhart, Ryan Vaspra, LaToya Palmer,

- 👥 Cameron Rentch, Mark Hines, Andy Rogers,

- 👥 Tony, Andy Rogers, craig,

- 👥 Ryan Vaspra, Andy Rogers, Adrienne Hester,

- 👥 Ryan Vaspra, Kelly C. Richardson, EdS, LaToya Palmer, Adrienne Hester,

- 👥 Ryan Vaspra, LaToya Palmer, Adrienne Hester,

- 👥 Mike Everhart, Ryan Vaspra, Andy Rogers,

- 👥 sean, Cameron Rentch, Andy Rogers,

- 👥 Mike Everhart, deleted-U04GZ79CPNG, deleted-U04KC9L4HRB,

- 👥 Cameron Rentch, Ryan Vaspra, luke,

- 👥 Mike Everhart, Cameron Rentch, deleted-U056TJH4KUL,

- 👥 Mike Everhart, Cameron Rentch, Mark Maniora,

- 👥 Cameron Rentch, Ryan Vaspra, deactivateduser594162, Caleb Peters, deactivateduser497326,

- 👥 Cameron Rentch, Brian Hirst, Mark Maniora,

- 👥 Cameron Rentch, Ryan Vaspra, Andy Rogers, Adrienne Hester,

- 👥 Mike Everhart, Cameron Rentch, Brian Hirst,

- 👥 Ryan Vaspra, Brian Hirst, luke,

- 👥 Cameron Rentch, Tony, deleted-U056TJH4KUL,

- 👥 Ryan Vaspra, deleted-U04GZ79CPNG, deleted-U04KC9L4HRB,

- 👥 silvia, Cameron Rentch, Tony, deleted-U056TJH4KUL, deleted-U05DY7UGM2L,

- 👥 Jack Altunyan, Cameron Rentch, Maximus, Caleb Peters,

- 👥 Cameron Rentch, Ryan Vaspra, deleted-U05DY7UGM2L,

- 👥 deleted-U042MMWVCH2, Adrienne Hester, deleted-U059JKB35RU,

- 👥 Ryan Vaspra, luke, Adrienne Hester,

- 👥 Cameron Rentch, luke, deleted-U05DY7UGM2L,

- 👥 Cameron Rentch, Ryan Vaspra, Andy Rogers, LaToya Palmer, Adrienne Hester,

- 👥 Cameron Rentch, Ryan Vaspra, Andy Rogers, LaToya Palmer,

- 👥 Cameron Rentch, Ryan Vaspra, LaToya Palmer,

- 👥 Cameron Rentch, Tony, Andy Rogers, brettmichael,

- 👥 Cameron Rentch, Andy Rogers, Adrienne Hester,

- 👥 Cameron Rentch, Ryan Vaspra, deleted-U056TJH4KUL,

- 👥 Mike Everhart, Brian Hirst, Dwight Thomas,

- 👥 Cameron Rentch, Andy Rogers, LaToya Palmer, Adrienne Hester,

- 👥 Cameron Rentch, Andy Rogers, LaToya Palmer,

- 👥 Ryan Vaspra, Andy Rogers, deleted-U05DY7UGM2L,

- 👥 Ryan Vaspra, Caleb Peters, Andy Rogers, LaToya Palmer, Adrienne Hester, Lee Zafra,

- 👥 Andy Rogers, LaToya Palmer, Adrienne Hester,

- 👥 Andy Rogers, deleted-U04GZ79CPNG, Greg Owen,

- 👥 Cameron Rentch, Ryan Vaspra, luke, deleted-U05DY7UGM2L,

- 👥 silvia, Cameron Rentch, deactivateduser717106, Rose Fleming, debora,

- 👥 Cameron Rentch, Andy Rogers, debora,

- 👥 Cameron Rentch, Tony, deleted-U05DY7UGM2L,

- 👥 Cameron Rentch, Maximus, Caleb Peters,

- 👥 LaToya Palmer, Adrienne Hester, Greg Owen, Julie-Margaret Johnson,

- 👥 Cameron Rentch, Andy Rogers, Greg Owen,

- 👥 LaToya Palmer, Adrienne Hester, Greg Owen,

- 👥 Cameron Rentch, Ryan Vaspra, Nicholas McFadden,

- 👥 Mike Everhart, Cameron Rentch, Ryan Vaspra, Andy Rogers,

- 👥 Ryan Vaspra, Tony, Andy Rogers, deleted-U04KC9L4HRB,

- 👥 Mike Everhart, Caleb Peters, Dwight Thomas,

- 👥 Cameron Rentch, Andy Rogers, Mark Maniora,

- 👥 Ryan Vaspra, luke, Mark Maniora,

- 👥 Mike Everhart, Ryan Vaspra, deleted-U05DY7UGM2L,

- 👥 Mike Everhart, Jack Altunyan, Dwight Thomas,

- 👥 Cameron Rentch, deleted-U05DY7UGM2L, michaelbrunet,

- 👥 Cameron Rentch, Tony, deleted-U04GU9EUV9A, deleted-U05DY7UGM2L, deleted-U05EFH3S2TA, michaelbrunet,

- 👥 Cameron Rentch, deleted-U056TJH4KUL, deleted-U05DY7UGM2L,

- 👥 Cameron Rentch, Andy Rogers, deleted-U04GZ79CPNG, deleted-U04KC9L4HRB, deleted-U06C7A8PVLJ,

- 👥 Ryan Vaspra, luke, Quint Underwood,

- 👥 Cameron Rentch, Ryan Vaspra, debora,

- 👥 Cameron Rentch, Ryan Vaspra, luke, Mark Maniora, debora,

- 👥 LaToya Palmer, Adrienne Hester, Julie-Margaret Johnson,

- 👥 Mike Everhart, Cameron Rentch, Ryan Vaspra, Michael McKinney,

- 👥 Cameron Rentch, Andy Rogers, deleted-U05DY7UGM2L, debora, Nsay Y, brian580, Altonese Neely,

- 👥 Ryan Vaspra, Tony, debora,

- 👥 Cameron Rentch, Tony, brettmichael,

- 👥 Mike Everhart, Ryan Vaspra, Michael McKinney,

- 👥 Cameron Rentch, Ryan Vaspra, Chris, debora,

- 👥 Cameron Rentch, deleted-U04GU9EUV9A, deleted-U04HFGHL4RW, deleted-U05EFH3S2TA,

- 👥 Cameron Rentch, debora, brian580, Altonese Neely,

- 👥 Cameron Rentch, deleted-U04HFGHL4RW, deleted-U05EFH3S2TA,

- 👥 Cameron Rentch, deleted-U04GZ79CPNG, deleted-U04HFGHL4RW, deleted-U04KC9L4HRB, michaelbrunet, deleted-U067A4KAB9U, rachel,

- 👥 Jack Altunyan, Cameron Rentch, Caleb Peters,

- 👥 debora, brian580, Altonese Neely,

- 👥 Cameron Rentch, deleted-U04GU9EUV9A, deleted-U04HFGHL4RW, deleted-U04KC9L4HRB, deleted-U05EFH3S2TA, michaelbrunet,

- 👥 Ryan Vaspra, Nicholas McFadden, James Scott,

- 👥 Cameron Rentch, Andy Rogers, debora, brian580, Altonese Neely,

- 👥 Cameron Rentch, michaelbrunet, deleted-U067A4KAB9U,

- 👥 Tony, debora, brian580, Altonese Neely,

- 👥 Cameron Rentch, deleted-U04KC9L4HRB, michaelbrunet, deleted-U067A4KAB9U, rachel,

- 👥 Ryan Vaspra, debora, Lee Zafra, Nsay Y,

- 👥 Cameron Rentch, Maximus, Andy Rogers,

- 👥 Cameron Rentch, Joe Santana, michaelbrunet,

- 👥 Ryan Vaspra, Caleb Peters, debora, Nsay Y,

- 👥 Cameron Rentch, Tony, Andy Rogers, deleted-U04GU9EUV9A,

- 👥 Ryan Vaspra, Andy Rogers, deleted-U069G4WMMD4,

- 👥 Mike Everhart, Dwight Thomas, Michael McKinney,

- 👥 Ryan Vaspra, Andy Rogers, Adrienne Hester, Julie-Margaret Johnson, Lee Zafra,

- 👥 Jack Altunyan, Cameron Rentch, Michael McKinney,

- 👥 Cameron Rentch, Andy Rogers, debora, Altonese Neely,

- 👥 Ryan Vaspra, Tony, deleted-U04KC9L4HRB, deleted-U05EFH3S2TA,

- 👥 Ryan Vaspra, luke, deleted-U04GZ79CPNG, deleted-U04KC9L4HRB,

- 👥 Adrienne Hester, korbin, Lee Zafra,

- 👥 Cameron Rentch, Ryan Vaspra, debora, Nsay Y, brian580, Altonese Neely,

- 👥 Ryan Vaspra, Brian Hirst, luke, Nicholas McFadden,

- 👥 Ryan Vaspra, Andy Rogers, luke, Altonese Neely,

- 👥 Mike Everhart, Ryan Vaspra, Brian Hirst, luke, Nicholas McFadden, Quint Underwood, James Scott,

- 👥 Cameron Rentch, Ryan Vaspra, luke, Michael McKinney,

- 👥 Cameron Rentch, deleted-U04GZ79CPNG, deleted-U04HFGHL4RW, deleted-U04KC9L4HRB,

- 👥 Tony, deleted-U05M3DME3GR, debora,

- 👥 Mike Everhart, Cameron Rentch, deleted-U04GZ79CPNG, deleted-U04HFGHL4RW, deleted-U04KC9L4HRB, michaelbrunet, deleted-U067A4KAB9U, rachel,

- 👥 Mark Maniora, Dwight Thomas, Nicholas McFadden, Greg Owen,

- 👥 Cameron Rentch, deleted-U04HFGHL4RW, deleted-U04KC9L4HRB, michaelbrunet,

- 👥 Ryan Vaspra, Tony, Andy Rogers, luke, deleted-U04GU9EUV9A, deleted-U04KC9L4HRB,

- 👥 Andy Rogers, deleted-U05DY7UGM2L, Nsay Y,

- 👥 Cameron Rentch, Andy Rogers, deleted-U04GU9EUV9A, deleted-U04GZ79CPNG, deleted-U04KC9L4HRB, deleted-U06AYDQ4WVA,

- 👥 Cameron Rentch, deleted-U04HFGHL4RW, michaelbrunet,

- 👥 Cameron Rentch, Ryan Vaspra, parsa,

- 👥 Mark Maniora, Nicholas McFadden, Greg Owen,

- 👥 Cameron Rentch, debora, Nsay Y,

- 👥 Cameron Rentch, deleted-U05DY7UGM2L, michaelbrunet, deleted-U067A4KAB9U, Nsay Y,

- 👥 Cameron Rentch, Ryan Vaspra, Tony, Andy Rogers, LaToya Palmer, deleted-U04GU9EUV9A,

- 👥 Cameron Rentch, Andy Rogers, patsy,

- 👥 LaToya Palmer, Adrienne Hester, Dwight Thomas,

- 👥 Cameron Rentch, debora, Altonese Neely,

- 👥 Cameron Rentch, Ryan Vaspra, Kelly C. Richardson, EdS, Andy Rogers, LaToya Palmer, Adrienne Hester, korbin, deleted-U069G4WMMD4,

- 👥 Cameron Rentch, Ryan Vaspra, Tony, debora, brian580, Altonese Neely,

- 👥 Mike Everhart, Cameron Rentch, Ryan Vaspra, parsa,

- 👥 Ryan Vaspra, Andy Rogers, korbin,

- 👥 Mike Everhart, Cameron Rentch, Ryan Vaspra, Dwight Thomas,

- 👥 Cameron Rentch, Dorshan Millhouse, Michael McKinney,

- 👥 Ryan Vaspra, Adrienne Hester, korbin,

- 👥 Ryan Vaspra, LaToya Palmer, Adrienne Hester, korbin,

- 👥 Ryan Vaspra, Tony, deleted-U04R23BEW3V, korbin,

- 👥 Mike Everhart, Cameron Rentch, Ryan Vaspra, Fabian De Simone,

- 👥 Nsay Y, brian580, Altonese Neely,

- 👥 Jack Altunyan, Cameron Rentch, Ryan Vaspra, Maximus, Caleb Peters, debora, Altonese Neely,

- 👥 Jack Altunyan, Cameron Rentch, Ryan Vaspra, debora, Altonese Neely,

- 👥 Ryan Vaspra, Andy Rogers, deleted-U05CTUBCP7E,

- 👥 brian580, Altonese Neely, parsa,

- 👥 Cameron Rentch, Ryan Vaspra, michaelbrunet, James Scott,

- 👥 Ryan Vaspra, Andy Rogers, patsy,

- 👥 Mike Everhart, Jack Altunyan, Ryan Vaspra, parsa,

- 👥 Andy Rogers, brian580, Altonese Neely,

- 👥 deleted-U04HFGHL4RW, brian580, Altonese Neely,

- 👥 Mike Everhart, Dwight Thomas, Greg Owen,

- 👥 Cameron Rentch, Ryan Vaspra, Andy Rogers, deleted-U04KC9L4HRB, Adrienne Hester, korbin, deleted-U067A4KAB9U,

- 👥 Ryan Vaspra, Andy Rogers, Adrienne Hester, korbin,

- 👥 Adrienne Hester, deleted-U05CTUBCP7E, deleted-U069G4WMMD4,

- 👥 Ryan Vaspra, Tony, Andy Rogers, deleted-U04GZ79CPNG, deleted-U04KC9L4HRB, Adrienne Hester, deleted-U067A4KAB9U,

- 👥 Cameron Rentch, deleted-U04HFGHL4RW, deleted-U04R23BEW3V,

- 👥 Ryan Vaspra, brian580, Altonese Neely,

- 👥 Cameron Rentch, Mark Hines, deleted-U04GU9EUV9A,

- 👥 Cameron Rentch, Rose Fleming, brian580, Altonese Neely,

- 👥 sean, Ryan Vaspra, luke,

- 👥 Cameron Rentch, Ryan Vaspra, Lee Zafra,

- 👥 Cameron Rentch, deleted-U05EFH3S2TA, Nicholas McFadden,

- 👥 Andy Rogers, Adrienne Hester, deleted-U04R23BEW3V, Greg Owen, patsy,

- 👥 Ryan Vaspra, Brian Hirst, luke, Mark Maniora,

- 👥 David Temmesfeld, Dorshan Millhouse, LaToya Palmer, Adrienne Hester,

- 👥 Cameron Rentch, deleted-U04GU9EUV9A, deleted-U04HFGHL4RW, deleted-U04R23BEW3V,

- 👥 Mark Hines, LaToya Palmer, Adrienne Hester,

- 👥 deleted-U04GZ79CPNG, Greg Owen, deleted-U06AYDQ4WVA, deleted-U06C7A8PVLJ, deleted-U06N28XTLE7,

- 👥 LaToya Palmer, Adrienne Hester, Mark Maniora, Dwight Thomas, Nicholas McFadden, Julie-Margaret Johnson, korbin, Lee Zafra,

- 👥 Ryan Vaspra, luke, parsa,

- 👥 Cameron Rentch, Chris, Andy Rogers, Adrienne Hester,

- 👥 Mike Everhart, Cameron Rentch, Ryan Vaspra, deleted-U05DY7UGM2L,

- 👥 Mark Maniora, Dwight Thomas, Greg Owen,

- 👥 Ryan Vaspra, deleted-U055HQT39PC, James Scott,

- 👥 Brian Hirst, luke, Mark Maniora,

- 👥 Cameron Rentch, deleted-U04GZ79CPNG, deleted-U05EFH3S2TA, deleted-U067A4KAB9U,

- 👥 deleted-U04GZ79CPNG, deleted-U04KC9L4HRB, korbin,

- 👥 Mike Everhart, deleted-U055HQT39PC, Dwight Thomas,

- 👥 Ryan Vaspra, Brian Hirst, Nicholas McFadden,

- 👥 Cameron Rentch, deleted-U04GZ79CPNG, deleted-U04HFGHL4RW, deleted-U055HQT39PC, deleted-U05EFH3S2TA, michaelbrunet, deleted-U067A4KAB9U,

- 👥 Cameron Rentch, Tony, brettmichael, deleted-U04GU9EUV9A, deleted-U05EFH3S2TA,

- 👥 Cameron Rentch, Tony, Andy Rogers, debora,

- 👥 Cameron Rentch, Brian Hirst, luke, Mark Maniora,

- 👥 Cameron Rentch, deleted-U04GZ79CPNG, deleted-U05EFH3S2TA,

- 👥 Cameron Rentch, deleted-U04GZ79CPNG, deleted-U05EFH3S2TA, michaelbrunet, deleted-U067A4KAB9U,

- 👥 Ryan Vaspra, LaToya Palmer, Adrienne Hester, Julie-Margaret Johnson, Lee Zafra, Melanie Macias,

- 👥 Cameron Rentch, Maximus, Dorshan Millhouse,

- 👥 Ryan Vaspra, Brian Hirst, Andy Rogers, luke, Mark Maniora, brian580, Altonese Neely, patsy,

- 👥 Cameron Rentch, Ryan Vaspra, deleted-U056TJH4KUL, deleted-U05DY7UGM2L, James Scott,

- 👥 LaToya Palmer, Adrienne Hester, Mark Maniora, Dwight Thomas, Greg Owen, Julie-Margaret Johnson, korbin, Lee Zafra,

- 👥 Cameron Rentch, deleted-U04GU9EUV9A, deleted-U04HFGHL4RW, michaelbrunet, deleted-U067A4KAB9U,

- 👥 Ryan Vaspra, Mark Maniora, brian580, Altonese Neely,

- 👥 Andy Rogers, LaToya Palmer, Adrienne Hester, Mark Maniora, Dwight Thomas, Greg Owen, Julie-Margaret Johnson, korbin, Lee Zafra,

- 👥 Ryan Vaspra, Brian Hirst, luke, Nicholas McFadden, brian580, Altonese Neely,

- 👥 Cameron Rentch, Ryan Vaspra, brian580, Altonese Neely,